Every year, Google releases their end-of-year recap summarizing what they have done to fight spam.

In this year’s report, they highlighted how heavily they rely on AI to fight spam.

They also tout its effectiveness at catching both known and never-before-seen trends in search spam.

According to one of their statistics, in 2020 they reduced spam by more than 80% compared to just a couple of years earlier.

How Has Google Fought Spam ‘Smarter’?

Google explains its process for fighting spam and describes what happens behind the scenes:

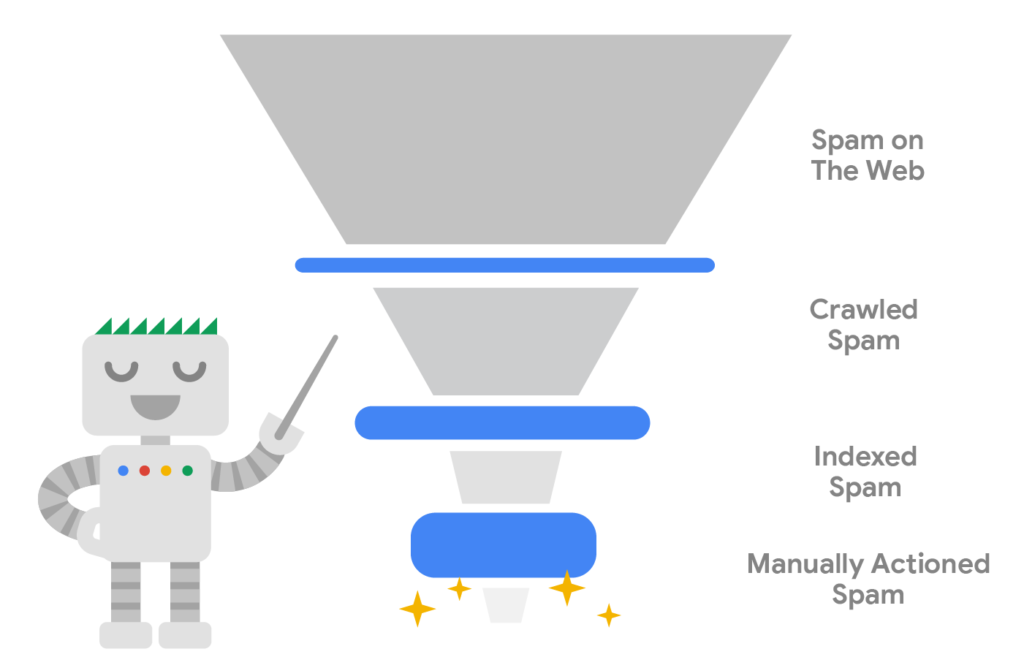

This diagram conceptualizes how we defend against spam.

First, we have systems that can detect spam when we crawl pages or other content. Crawling is when our automatic systems visit content and consider it for inclusion in the index we use to provide search results. Some content detected as spam isn’t added to the index.

These systems also work for content we discover through sitemaps and Search Console. For example, Search Console has a Request Indexing feature so creators can let us know about new pages that should be added quickly. We observed spammers hacking into vulnerable sites, pretending to be the owners of these sites, verifying themselves in the Search Console and using the tool to ask Google to crawl and index the many spammy pages they created. Using AI, we were able to pinpoint suspicious verifications and prevented spam URLs from getting into our index this way.

Next, we have systems that analyze the content that is included in our index. When you issue a search, they work to double-check if the content that matches might be spam. If so, that content won’t appear in the top search results. We also use this information to better improve our systems to prevent such spam from being included in the index at all.

The result is that very little spam actually makes it into the top results anyone sees for a search, thanks to our automated systems that are aided by AI. We estimated that these automated systems help keep more than 99% of visits from Search completely spam-free. As for the tiny percentage left, our teams take manual action and use the learnings from that to further improve our automated systems.

They also mention that they discover more than 40 billion spammy pages every day. That’s a ton of spam!

Among the types of spam that Google continuously protects against is hacked spam, or spam from sites that have been compromised by hackers.

One of the most common attacks they observed involved spammers hacking into vulnerable sites. Posing as the sites’ owners, the spammers verified themselves in Google Search Console and then used the tool to get Google to crawl and index the spammy pages they had created.

This isn’t the first time they have used AI, either. In fact, they have been using it for several years at least, especially since the introduction of …

How are Sites Compromised by Hackers?

Google explains that the following are the most common reasons that sites are hacked and compromised:

- stolen or otherwise compromised passwords;

- missing security updates;

- insecure themes and plugins;

- social engineering;

- weak site security policies; and

- leaks of sensitive data.

This is why they note that:

As for social engineering, it appears to remain at least somewhat effective:

They also note that poor security policies are the main reason why hackers are able to gain access to a website for malicious attacks.

If You See Something, Say Something

If you are aware of or have seen spam in Google’s search results, you may want to file a spam report.

It helps to note the exact query you used, along with any other identifying information about the spam you saw in the SERPs.

You can submit one using Google’s spam report form.