When you’re using Semrush’s Site Audit Warnings tool, you can be presented with three types of issues:

- Errors are the most severe and must be immediately addressed.

- Warnings are of moderate severity and should be rectified after any errors.

- Notices are issues that may not be creating problems but should still be further examined when possible.

Here, we’ll take a closer look at warning-level issues specifically. While not quite as urgent as their error-level counterparts, it’s still vital to promptly fix any warnings that may be affecting your site’s search engine results page (SERP) performance.

Semrush’s Site Audit Warnings Tool

As soon as you’ve addressed the errors uncovered by Semrush’s Site Audit, you’d be wise to start working on warnings. Though you don’t exactly need to fix them, you can absolutely benefit from doing so.

These are the most important warnings you may find in your Site Audit report.

Pages Don’t Have Meta Descriptions

It’s been known for years now that meta descriptions do not have a direct influence on rankings. They can, however, have an impact on conversions.

A well-written natural description will beat an auto-generated description every time, assuming that you tailor it to your audience and you are working to garner a positive response from them.

That’s because a customized description will tell users exactly what your page is about and entice them to click on it. But if a page doesn’t have a meta description, Google will automatically create one for it that may or may not be accurate.

Avoid turning away potential visitors with auto-generated descriptions by creating a unique one for every page. As you do, remember to keep your meta descriptions at 155 characters or less and always include the primary keyword.

The more effort you put into your meta descriptions while working with the preceding best practices, the better!
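
If you want to spot-check a handful of pages yourself, the two rules above (a description exists, and it stays within 155 characters) can be sketched in a few lines of Python. This is only an illustration using the standard library; the function name and sample markup are made up for the example, and it is not how Semrush performs its own check.

```python
from html.parser import HTMLParser

MAX_DESCRIPTION_LENGTH = 155  # limit suggested in the text above


class MetaDescriptionFinder(HTMLParser):
    """Collects the content of the first <meta name="description"> tag."""

    def __init__(self):
        super().__init__()
        self.description = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name == "description" and self.description is None:
            self.description = attributes.get("content") or ""


def audit_meta_description(html):
    """Return 'missing', 'too long' or 'ok' for one page's HTML."""
    finder = MetaDescriptionFinder()
    finder.feed(html)
    if not finder.description:
        return "missing"
    if len(finder.description) > MAX_DESCRIPTION_LENGTH:
        return "too long"
    return "ok"


# Hypothetical sample pages
print(audit_meta_description('<head><meta name="description" content="Hand-made oak desks."></head>'))
print(audit_meta_description("<head><title>No description here</title></head>"))
```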

Your Pages Are Not Compressed

Semrush says that this warning is triggered when the Content-Encoding entity is not present in the response header.

What exactly does this mean? As Mozilla explains, the Content-Encoding entity header lists the encodings (such as compression) that have been applied to a response and tells browsers how to decode it. So, this warning means that your site’s pages aren’t being compressed, which translates to longer loading times and a worse user experience.

To remedy uncompressed pages, Google recommends enabling GZIP text compression, in part because all modern browsers both support and automatically request it.

Since proper page compression can have such a positive impact on the user experience, it’s an important optimization pain point to include in your best practices.
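
To get a feel for how much GZIP can save, here is a rough sketch using Python's built-in gzip module. The sample markup is invented for the example, and real-world savings will vary with how repetitive a page's code is. In practice, compression is switched on in your web server or CDN settings rather than in a script like this.

```python
import gzip


def gzip_savings(body):
    """Return (original_size, compressed_size) in bytes for a response body."""
    compressed = gzip.compress(body)
    return len(body), len(compressed)


# A made-up, repetitive page body, similar in spirit to real HTML markup.
sample_page = ('<li class="product"><a href="/item">Example item</a></li>\n' * 500).encode("utf-8")

original_size, compressed_size = gzip_savings(sample_page)
print(f"{original_size} bytes before, {compressed_size} bytes after gzip")
```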

Links on Your HTTPS Pages Point to HTTP Pages

When links on newer HTTPS pages direct search engine crawlers to older HTTP pages, the search engines can find it difficult to determine whether they should rank the HTTPS or HTTP version.

Luckily, the fix for this problem is very simple: Make sure that all your site’s HTTP links are updated to HTTPS. That’s it!

If you’re unfamiliar with HTTPS and why you should use it for your site, Google has plenty of documentation on the topic.

Your Pages Have a Low Text-to-HTML Ratio

When we talk about text-to-HTML ratio, we’re talking about each page’s ratio of visible text to invisible HTML code. The former includes the text that’s actually shown to users, while the latter includes the markup that browsers process but don’t display as text.

A low text-to-HTML ratio, i.e. lots of HTML code but only a little text, is not a direct ranking factor—Google’s John Mueller confirmed as much in a 2016 Webmaster Central hangout.

However, a text-to-HTML ratio of ten percent or less could indicate possible HTML bloat, which in turn could be slowing down page speed and negatively affecting the user experience.

So if this warning is triggered in Semrush, be sure to test the affected pages’ speed and check for any extraneous or unminified HTML code.

It’s still a good idea to make your pages leaner in terms of code, especially when it concerns your users—faster pages will always make your users happier.
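
For a rough idea of where a page stands, you can estimate the ratio yourself. The sketch below assumes the ratio is simply the number of visible text characters divided by the total number of characters in the HTML source; Semrush's exact formula may differ, and the class and function names are made up for the example.

```python
from html.parser import HTMLParser


class VisibleTextCollector(HTMLParser):
    """Gathers text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def text_to_html_ratio(html):
    """Visible text characters divided by total HTML characters (assumed formula)."""
    collector = VisibleTextCollector()
    collector.feed(html)
    collector.close()
    text = " ".join("".join(collector.parts).split())  # normalize whitespace
    return len(text) / len(html) if html else 0.0


page = "<html><body><p>Hello world</p></body></html>"
print(f"{text_to_html_ratio(page):.0%}")
```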

Your Images Don’t Have Alt Attributes

Images’ alt attributes, or alt text, serve to help both search engines and users with visual impairments understand their contents.

As such, images without alt text are missing out on opportunities to rank better with search engines and be more accessible to more users.

Within a page’s HTML code, alt text looks like this:

<img src="IMAGE-FILE-NAME.JPG" alt="ALT TEXT HERE">

Always make your alt text as clear and descriptive as possible and keep it under 125 characters. By following these simple guidelines, you can improve your pages’ accessibility and rankings.
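
If you'd like to check a page's images by hand, a small script can list the ones with no alt attribute at all, along with any alt text over 125 characters. This is a sketch, not Semrush's method. It treats an empty alt="" as intentional (a common convention for purely decorative images), and the file names are hypothetical.

```python
from html.parser import HTMLParser

MAX_ALT_LENGTH = 125  # limit suggested in the text above


class AltTextAuditor(HTMLParser):
    """Records images with no alt attribute or with overly long alt text."""

    def __init__(self):
        super().__init__()
        self.missing = []
        self.too_long = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        if "alt" not in attributes:
            self.missing.append(src)  # attribute is absent entirely
        elif len(attributes["alt"] or "") > MAX_ALT_LENGTH:
            self.too_long.append(src)


auditor = AltTextAuditor()
auditor.feed('<img src="hero.jpg"><img src="bike.jpg" alt="A red bicycle leaning on a wall">')
print("Missing alt:", auditor.missing)
print("Alt too long:", auditor.too_long)
```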

Pages Have a Low Word Count

As Semrush explains, this issue arises when any of your pages contain fewer than 200 words. This is because the amount of content on a page acts as a quality signal to search engines: It’s not necessary to have thousands of words on every page, but each page does need to have enough content to satisfy its main purpose.

As Google specifies in its Search Quality Rater guidelines, one of the attributes of a low-quality page is “an unsatisfying amount of main content for the purpose of the page.”

In other words, whether a page’s purpose is to report on a news story, describe a product or something else entirely, it needs to have enough content to achieve that purpose.

And while there’s no such thing as an ideal word count, a study from Backlinko and Buzzsumo found that on average, long-form content gets 77.2 percent more links than short-form content. So, focus on creating enough content for each page to fulfill its purpose, and aim for long-form content whenever possible and appropriate.

In the end, though, it all depends on the topic in question. While longer and more in-depth content may get more links, other intent-based search queries—such as those concerning money or transactions—may lend themselves better to short-form content.

Whether you create long- or short-form content, what’s most important is that it serves to achieve the page’s purpose in full.

Your Pages’ JavaScript and CSS Files Are Too Large

In Site Audit, this issue occurs when the total transfer size of the JavaScript and CSS files on a given page is larger than 2MB.

Like many warning-level issues, this doesn’t have a direct impact on your pages’ rankings. It can harm the user experience, though, which may lead to fewer satisfied visitors and lower SERP performance.

Such a scenario is possible because the larger a page’s JavaScript and CSS files, the longer it will take to load. Since page speed has a significant impact on the user experience, you should strive to keep each page’s files as lean as possible.

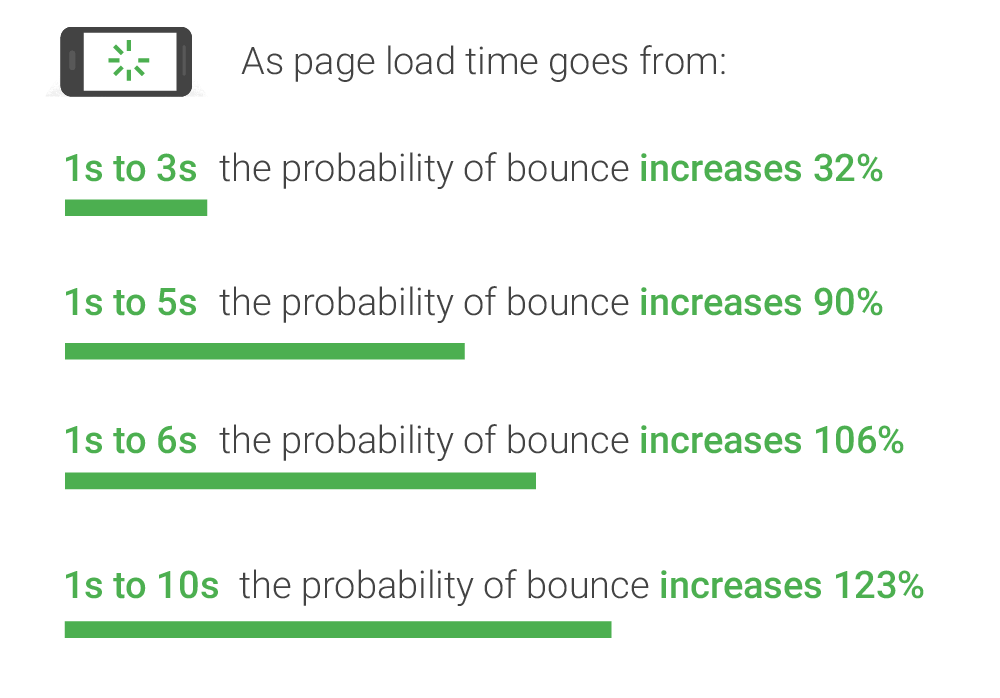

After all, as page load time moves from just one to three seconds, users’ probability of leaving the page altogether increases by 32 percent:

To avoid this, be sure to remove any unnecessary JavaScript and CSS files and keep your site’s plugins to a minimum.

You Have Unminified JavaScript and CSS Files

One way you can dramatically reduce the size of your pages’ JavaScript and CSS files is by minifying them. As such, Semrush will alert you if any of your site’s files haven’t been minified.

Not familiar with minification? As Mozilla puts it, “minification is the process of removing unnecessary or redundant data without affecting how a resource is processed by the browser.” This can mean removing things like white space, unused code and comments.

By removing nonessential elements such as those, you can help users’ browsers process and load each page’s JavaScript and CSS files more efficiently.

“But I’m an SEO practitioner,” you might be saying. “I have no idea what the heck all this means. Do I really have to learn to code?” No, you don’t have to learn to code to be an excellent SEO. In fact, issues like these can be remedied with little to no coding knowledge.

If your site uses JavaScript and CSS files hosted on an external site, Semrush recommends asking the site’s owner to minify them.

If they’re hosted on your own site, you can simply use a browser-based minifier that does all the heavy lifting for you.
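
To demystify what a minifier actually does, here is a deliberately naive sketch for CSS. It strips comments and unnecessary white space with a few regular expressions. It does not handle edge cases such as punctuation inside quoted strings, so treat it as an illustration of the idea and use a dedicated minifier for real files.

```python
import re


def minify_css(css):
    """Naive CSS minifier: removes comments and redundant white space."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop comments
    css = re.sub(r"\s+", " ", css)                        # collapse white space
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)           # trim around punctuation
    css = re.sub(r":\s+", ":", css)                       # trim after colons
    css = css.replace(";}", "}")                          # last semicolon in a rule is optional
    return css.strip()


source = """
/* Button styles */
.button {
    color: #ffffff;
    margin: 0 auto;
}
"""

print(minify_css(source))  # .button{color:#ffffff;margin:0 auto}
```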

Your Site Contains Broken External Links

While broken external links won’t make or break your site’s performance, they can become a pain for users. No one wants to waste their time clicking on a link that doesn’t work, and your site’s visitors are no exception.

As such, multiple broken external links can degrade the user experience and may cause your site’s SERP rankings to drop as a result.

If the Site Audit tool reports any external links as broken, follow them yourself to see if they lead to a nonexistent page. If they do, replace the faulty links with functional ones. If they don’t, you may want to make the other site’s webmaster aware of the problem.

Your Site Contains Broken External Images

If your site contains images of any kind, chances are one or more of them will fail to appear at some point in time. This can happen because the image no longer exists, has been relocated to another URL or was linked to via an incorrect URL.

Whatever the case, broken external images aren’t appealing to viewers and therefore won’t be any help to your SERP rankings, either.

To fix a broken image, you can replace it with a new one, remove it altogether or change its URL to a correct one. And to improve the user experience when images do inevitably break, always include alt text for each image and consider serving a placeholder or default image instead.

Your Pages Have Too Much Text in the Title Tags

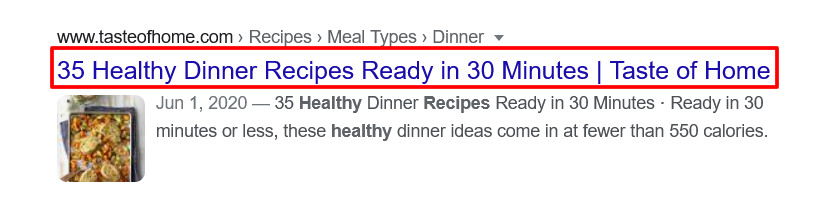

Just as with meta descriptions, you want your pages’ title tags (i.e. meta titles) to clearly communicate each one’s topic and purpose. This way, users can tell what your pages are about right from the SERPs, as is beautifully demonstrated by an article from Taste of Home:

But since Google tends to truncate title tags longer than 60 characters, some title tags may be too long for their own good.

So if your Site Audit report serves you this warning, be sure to trim each page’s title tag to an appropriate length while retaining as much detail and clarity as possible. And if you’re having trouble coming up with the perfect titles, refer to Google’s best page title practices for guidance.

Your Pages Don’t Have <h1> Headings

HTML heading tags, which move in decreasing order of importance from <h1> to <h6>, tell both readers and search engine bots how a page’s content is organized and what each section is about.

Since it signifies the greatest level of significance, each page’s <h1> heading should be intact and well thought-out. As Semrush warns, if a page has no <h1> heading then search engines may rank it lower than they would have otherwise.

Be sure each page’s <h1> tag is properly implemented, too. While developers may believe that heading tags can be used interchangeably, these tags lose their significance when they are implemented outside normal semantic meaning.

What is their semantic meaning, you might wonder? It means that these header tags are being used in their proper order:

- <h1> for the page title;

- <h2> for the first sub-headings throughout the document;

- <h3> for certain relevant subtopics;

- and so on through the <h6> tag.

Adhere to these guidelines and your site’s SERP rankings are sure to thank you.
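
The heading order described above can be checked with a short script. The sketch below flags a page with no <h1>, more than one <h1>, or a heading that skips a level (say, an <h2> followed directly by an <h4>). The rules and names are this example's own assumptions rather than Semrush's exact checks.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level (1-6) of every heading tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html):
    """Return a list of human-readable problems with the heading structure."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    problems = []
    if levels.count(1) == 0:
        problems.append("no <h1>")
    elif levels.count(1) > 1:
        problems.append("more than one <h1>")
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:  # e.g. <h2> straight to <h4>
            problems.append(f"<h{previous}> is followed by <h{current}>")
    return problems


print(heading_problems("<h1>Title</h1><h2>Part</h2><h3>Detail</h3><h2>Part</h2>"))
print(heading_problems("<h2>Part</h2><h4>Detail</h4>"))
```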

Your Pages Have Duplicate <h1> and Title Tags

While using the same title in your <h1> tag and title tag may sound like a good idea, doing so can backfire when search engines view your page as being over-optimized or keyword-stuffed.

Plus, you’ll also be missing out on an opportunity to include a greater variety of keywords for search engines and users to see.

While some SEO practitioners think that it’s acceptable to use more than one h1 tag, we disagree. In our experience, the proper semantic, hierarchical structure should be used for content. That means <h1> tags for the page title and <h2> tags for sub-headings.

Just don’t duplicate your title tags and <h1> tags—make sure that you create unique content for both.

You Have Too Many On-Page Links

Here, Semrush will give you a warning if any pages have too many on-page links. Their magic number is 3,000.

I disagree. While it has been reported in the past that 100 links per page is the maximum number that you can have before causing problems with search engine crawlers, the reality is that there really is not much of a limit at all.

In a Google Search Central YouTube video, Matt Cutts explained that there is no concrete maximum amount of links, and webmasters should simply stick to “a reasonable number.”

Just keep in mind that the more links a page has, the less link equity each linked page will receive. So if you have Semrush’s upper limit of 3,000 links on one page, each link will only get one 3,000th of the original page’s authority.

So, I think it’s best to err on the side of caution and stick to a couple hundred links or fewer. Provided those links make sense and provide value to users, this will ensure there’s no chance of your site sending a spam signal to Google.

Your Pages Have Temporary Redirects

When a page has been temporarily moved to another location, it will return an HTTP status code indicating a temporary redirect, such as status 302.

Search engines will typically keep the original URL in their index, though, and may not pass link equity to the new page. In this way, unintentional temporary redirects can hurt your rankings and block link equity.

To ensure that all the pages you want to rank can do so, verify that any temporary redirects Semrush alerts you to are both intentional and appropriate. For instance, 302 redirects are useful when A/B testing, redesigning or updating the site or fixing a broken page.

And remember, 302 redirects are not a permanent solution. If you want to redirect users to a new page for good, simply use a 301 redirect instead.
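
When reviewing a list of redirecting URLs, it helps to sort the status codes into temporary and permanent. The sketch below treats 302 and 307 as temporary and 301 and 308 as permanent, which matches how those codes are defined in the HTTP standard. Which codes Semrush itself reports under this warning is an assumption here.

```python
from http import HTTPStatus

PERMANENT = {HTTPStatus.MOVED_PERMANENTLY, HTTPStatus.PERMANENT_REDIRECT}  # 301, 308
TEMPORARY = {HTTPStatus.FOUND, HTTPStatus.TEMPORARY_REDIRECT}              # 302, 307


def classify_redirect(status_code):
    """Label an HTTP status code as a permanent or temporary redirect."""
    if status_code in PERMANENT:
        return "permanent"
    if status_code in TEMPORARY:
        return "temporary"
    return "not a redirect"


for code in (301, 302, 307, 200):
    print(code, classify_redirect(code))
```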

Your URLs Have Too Many Parameters

This warning is triggered not just when your URLs have lots of parameters, but when they have any number more than one.

Semrush says this is because multiple parameters make URLs less appealing to visitors as well as more difficult for search engines to index.

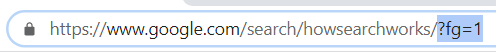

But what are URL parameters to begin with? As Google puts it, they allow you to track information about a click. They’re composed of a key and value separated by an equal sign (=) and joined by an ampersand (&), and the first parameter always follows a question mark (?). For example:

While such parameters can be genuinely useful (when keeping track of which platform users are coming from, for instance), too many can create problems.

Google states in its URL structure guidelines that excessively complex URLs, especially those containing several parameters, can hinder crawlers by “creating unnecessarily high numbers of URLs that point to identical or similar content on your site.”

The solution is to keep your URLs’ number of parameters to a minimum, and only use them if you know they’re necessary. If you do need to use multiple parameters, consider using Google’s URL Parameters tool to prevent its bots from crawling parameterized duplicate content.
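
Counting a URL's parameters is easy to automate. The sketch below uses Python's standard urllib.parse module and applies the more-than-one threshold described earlier in this section. The URLs and function names are made up for the example.

```python
from urllib.parse import parse_qsl, urlsplit


def count_parameters(url):
    """Count the key=value pairs in a URL's query string."""
    query = urlsplit(url).query
    return len(parse_qsl(query, keep_blank_values=True))


def has_too_many_parameters(url, limit=1):
    """True when the URL has more parameters than the limit (one, per the text above)."""
    return count_parameters(url) > limit


for url in (
    "https://www.example.com/shoes?color=red",
    "https://www.example.com/shoes?color=red&size=9&utm_source=newsletter",
):
    print(count_parameters(url), has_too_many_parameters(url), url)
```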

You’re Missing Hreflang Tags and Lang Attributes

The Site Audit tool will automatically warn you if your pages don’t have either hreflang tags or lang attributes.

Both serve to tell search engines which language is being used on a given page, and both are essential for multilingual sites (if you don’t have a multilingual site, of course, there’s no need to implement either).

By implementing hreflang or lang, you’ll ensure that search engines are able to point users to the correct version of each page depending on their location and preferred language. As a bonus, you’ll also help search engines avoid categorizing your pages as duplicate content.

Want to create some hreflang tags as quickly as possible? Aleyda Solis’ handy hreflang generator is the perfect place to start.

There Is No Character Encoding Declared on Your Site

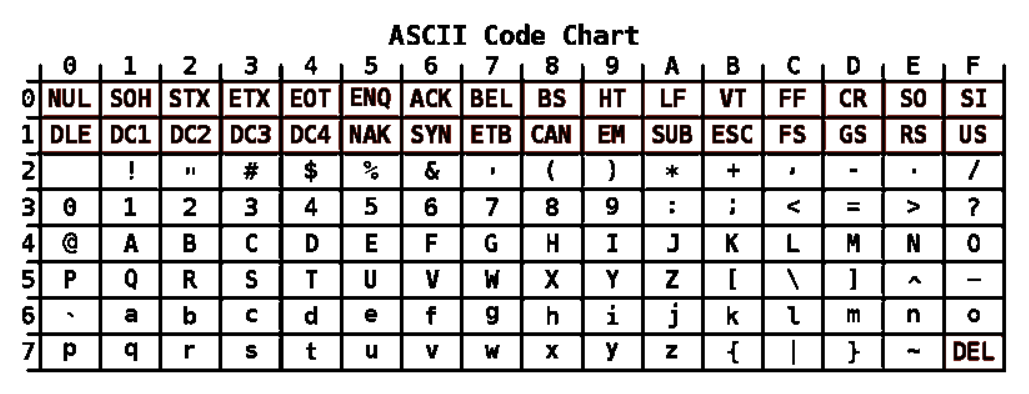

The purpose of character encoding is simple: to tell browsers how to display raw data as legible characters. This is typically done by assigning letters to numbers, as seen in this ASCII code chart:

Having such encoding in place is important because without it, browsers may not display pages’ text correctly. This can harm the user experience and result in lower search engine rankings.

As per Semrush, you can fix this issue either by specifying a character encoding in the charset parameter of the HTTP Content-Type header or using a meta charset attribute in your site’s HTML code.
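
To see why the declaration matters, consider what happens when a browser has to guess. The sketch below encodes a short phrase as UTF-8 and then decodes the same bytes with the wrong encoding, which is roughly what a visitor sees when a page's encoding is misidentified. The comments show the two standard ways of declaring UTF-8; the sample phrase is arbitrary.

```python
# Two standard ways to declare the encoding, per the fix described above:
#   HTTP header:  Content-Type: text/html; charset=utf-8
#   HTML markup:  <meta charset="utf-8">

text = "Crème brûlée"
raw_bytes = text.encode("utf-8")       # what the server actually sends

correct = raw_bytes.decode("utf-8")    # browser told the right encoding
garbled = raw_bytes.decode("latin-1")  # browser guessing the wrong encoding

print(correct)
print(garbled)
```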

Your Pages Don’t Have a Doctype Declared

When your page does not include a doctype, browsers render it in what is called quirks mode. In this mode, the browser imitates the non-standard behavior of late-1990s browsers, so the page may not display the way modern standards would dictate.

You can avoid this scenario entirely by simply declaring a doctype. As W3Schools explains, the <!doctype> declaration is not an HTML tag—rather, it serves to tell the user’s browser what type of document it’s dealing with.

To ensure all your site’s pages display correctly, all you need to do is add a <!doctype> declaration to the top of every page’s source.

If you’re using HTML5, that element will look like <!doctype html> (the declaration is not case sensitive, so capitalize it any way you’d like).
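
Checking whether a page declares a doctype takes only a few lines. The following sketch relies on Python's built-in HTML parser, which reports declarations such as <!doctype html> through its handle_decl hook. The class and function names are invented for the example.

```python
from html.parser import HTMLParser


class DoctypeDetector(HTMLParser):
    """Remembers the first doctype declaration found in a document."""

    def __init__(self):
        super().__init__()
        self.doctype = None

    def handle_decl(self, decl):
        # decl is the text between "<!" and ">", e.g. "DOCTYPE html"
        if decl.lower().startswith("doctype") and self.doctype is None:
            self.doctype = decl


def has_doctype(html):
    detector = DoctypeDetector()
    detector.feed(html)
    return detector.doctype is not None


print(has_doctype("<!doctype html><html><body>Hi</body></html>"))
print(has_doctype("<html><body>Hi</body></html>"))
```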

Your Pages Use Flash

Yes, Flash is the shunned development platform of the digital age. It’s horribly clunky, it presents issues with crawling when not properly implemented, and it’s just inconvenient to have to update a third-party plugin six hundred thousand times.

While once invaluable for adding interactivity and animations to a site, its functions can now be accomplished with HTML5, rendering it entirely obsolete. This is especially true now that Adobe officially stopped supporting Flash as of December 31, 2020.

If your pages still use Flash, they:

- can’t be properly crawled or indexed;

- don’t perform as well as they would otherwise;

- don’t display properly on mobile devices; and

- pose a security risk to your site.

All in all, it’s just not worth it to keep Flash around. Avoid using Flash in the future, and remove it from wherever it exists on your site.

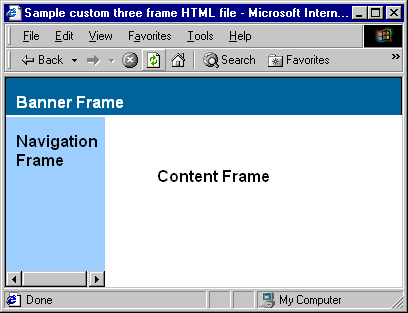

Your Pages Contain Frames

HTML frames used to be the bane of an SEO pro’s existence before HTML5. It seemed like every single website was rendered in frames, making for a clunky navigation experience. But, because it was the new “in” thing, everyone used frames to put together their website. A 2001 tutorial from IBM shows how frames could be used to organize a site’s layout:

But there’s a problem with new “in” things: They are seldom the best way to do things, and they can negate everything that SEOs try to achieve when optimizing a website.

Semrush agrees, saying that “<frame> tags are considered to be one of the most significant search engine optimization issues.”

The fix is quite simple—just don’t use frames! HTML5 doesn’t support them anyway, so both your site’s performance and user experience will be better for it.

Your URLs Have Underscores

There has always been a long-running debate about underscores (_) versus hyphens (-) in URLs. Which is better?

As Semrush says, search engines may consider words separated by an underscore to be one long word. With hyphens, though, each word will be viewed as distinct. That explains why Google itself recommends using hyphens over underscores.

So if you see this issue appear in your Site Audit report, simply remove any underscores from your URLs and replace them with hyphens.
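
If you have many URLs to update, the replacement can be scripted. The sketch below swaps underscores for hyphens in the path only, leaving the domain and any query string untouched. The URL is hypothetical, and when a live URL changes you would typically also redirect the old address to the new one.

```python
from urllib.parse import urlsplit, urlunsplit


def hyphenate_path(url):
    """Replace underscores with hyphens in the path portion of a URL."""
    parts = urlsplit(url)
    new_path = parts.path.replace("_", "-")
    return urlunsplit(parts._replace(path=new_path))


print(hyphenate_path("https://www.example.com/blog/site_audit_tips?utm_source=news_letter"))
```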

Your Internal Links Contain the Nofollow Attribute

In the SEO world, the nofollow attribute works as a withheld editorial vote for a link. In other words, it tells search engines that you don’t vouch for the linked page and don’t want to pass value to it.

Since in most cases you do want to pass value to all your internal links, Semrush will notify you if any of those links contain the nofollow attribute. If you see this warning, check to ensure that the attribute is there intentionally.

A good rule of thumb? If you don’t have a very good reason for using the nofollow attribute, don’t use it at all.

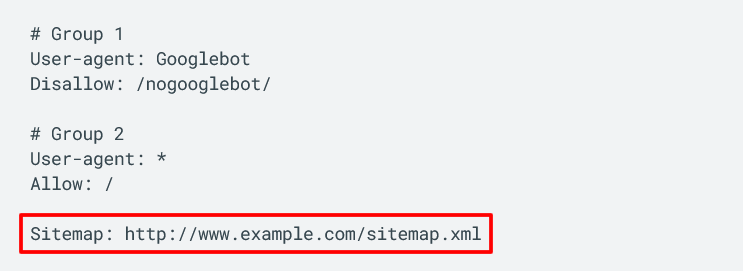

Your Sitemap Is Not Indicated in Your Robots.txt File

Semrush will display a warning if your robots.txt file doesn’t contain a link to your sitemap. This is because when you do include such a link, it’s easier for search engine bots to understand which pages they should crawl and how to navigate to them.

You can fix this issue by, you guessed it, adding a sitemap directive to your robots.txt file. When you have, it will look something like this example from Google’s documentation:

As you can see, all it takes is a simple link to help make your site even more crawlable than it was before.
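
You can confirm that a robots.txt file declares a sitemap with Python's built-in robots.txt parser (the site_maps method requires Python 3.8 or newer). The file contents and domain below are placeholders for the example, not taken from Google's documentation.

```python
from urllib.robotparser import RobotFileParser


def declared_sitemaps(robots_txt):
    """Return the sitemap URLs listed in a robots.txt file (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []


# Placeholder robots.txt contents
robots_with_sitemap = """\
User-agent: *
Disallow: /private/
Sitemap: https://www.example.com/sitemap.xml
"""

print(declared_sitemaps(robots_with_sitemap))
print(declared_sitemaps("User-agent: *\nDisallow: /private/\n"))
```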

You Don’t Have a Sitemap

Even worse than not having a sitemap linked in your robots.txt file is not having a sitemap at all. That’s because a sitemap acts as a directory of all your site’s pages that search engine bots can use to achieve more efficient crawling and indexing.

So if Semrush alerts you that no sitemap file can be found, it would be worth your time to create one. To get started, read Google’s guidelines on building and submitting a sitemap. Note that while sitemap files are commonly referred to as sitemap.xml files, you don’t have to use the XML format. If you wish, you can instead use:

- an RSS, mRSS or Atom 1.0 feed URL;

- a basic text file; or

- a sitemap that’s been automatically generated by Google Sites.
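
If you do go the XML route, a bare-bones sitemap is simple to generate. The sketch below builds one with Python's standard library, using the namespace defined by the sitemaps.org protocol and only the required loc element for each page. The URLs are placeholders, and most content management systems or plugins can produce this file for you.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap (as bytes) listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Placeholder URLs
sitemap_xml = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/about",
])
print(sitemap_xml.decode("utf-8"))
```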

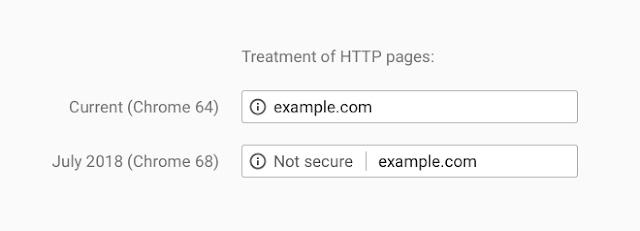

Your Homepage Lacks HTTPS Encryption

For quite a few years now, Hypertext Transfer Protocol Secure (HTTPS) has been a ranking signal in Google’s algorithms. It was in 2018, however, that HTTPS really became a significant attribute of Google’s Chrome browser. This was when Google began labelling all HTTPS sites as not secure with the release of Chrome version 68:

As a result, Semrush says that websites without HTTPS support may rank lower on Google’s SERPs, while those that do support HTTPS tend to rank higher.

So if you haven’t already switched your site from HTTP to HTTPS, take a look at Google’s guide on how to do so.

As with other SEO efforts, it’s important to assess your situation, test what’s right for your site and move forward from there if it makes the most sense for you.

Your Subdomains Don’t Support SNI

Server Name Indication, or SNI for short, is an extension for the widely-used Transport Layer Security (TLS) encryption protocol. With SNI, a server can securely host multiple TLS certificates for several different sites under just one IP address.

This sounds awfully technical, but it all boils down to a simple idea: As Semrush puts it, using SNI “may improve security and trust.” What’s not to like about that?

To fix this issue, all you need to do is implement SNI support for your subdomains. If you don’t know how, contact your site’s security certificate provider or ask the nearest network security specialist.

Your Robots.txt File Contains Blocked Internal Resources

CSS, JavaScript, image and video files are all examples of internal resources that can be blocked within a site’s robots.txt file through the use of the disallow directive.

When that directive is in place, search engines are unable to access the affected files, and are therefore unable to crawl, index and rank them, too.

Usually, unless you have a very specific situation that requires it, there are no reasons to block internal resources in your robots.txt file.

If you receive this warning from Semrush, you may want to confirm that the resources in question are indeed blocked by using Google’s URL Inspection tool. If they are blocked and you don’t have a concrete reason for doing so, remove the disallow directive to re-enable crawling and indexing.
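
For a quick local check before turning to Google's tool, Python's built-in robots.txt parser can tell you which resource URLs a given set of rules would block. Keep in mind that this parser uses simple prefix matching and does not replicate every detail of how Googlebot interprets robots.txt (wildcards, for instance), so treat the result as a first pass. The rules and URLs below are invented for the example.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt, resource_urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


# Placeholder rules and resources
rules = """\
User-agent: *
Disallow: /assets/js/
"""

resources = [
    "https://www.example.com/assets/js/app.js",
    "https://www.example.com/assets/css/site.css",
]

print(blocked_resources(rules, resources))
```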

Your JavaScript and CSS Files Are Not Compressed

Just as page compression can improve page speed and your site’s performance, so too can the compression of JavaScript and CSS files.

As Semrush says, compressing JavaScript and CSS files “significantly reduces their size as well as the overall size of your webpage, thus improving your page load time.”

If your site contains JavaScript and CSS files hosted on another site, contact that site’s webmaster and request that the files be compressed. If you’re able to compress the files yourself, though, by all means do so.

Useful tools for quickly and easily enabling compression include JSCompress and CSS Compressor, both of which only require a simple copy and paste.

Your JavaScript and CSS Files Are Not Cached

We’re not done with JavaScript and CSS files just yet. If browser caching isn’t specified in your page’s response header, users’ browsers won’t be able to cache and reuse JavaScript and CSS files, and will have to reload them completely on every visit.

In other words, uncached JavaScript and CSS files equal slower load times and a worse user experience.

If your site uses JavaScript and CSS files hosted on an external site, then you’ll once again need to contact the site’s webmaster and request they be cached. If your site hosts its own files, though, simply enable caching in the response header.

Your Pages Have Too Many JavaScript and CSS Files

Even if all your site’s JavaScript and CSS files are both compressed and cached, your pages’ performance can be negatively affected if there are too many of those files to begin with.

While Semrush will display a warning if a page has more than 100 JavaScript and/or CSS files, you’d be wise to have far fewer than that. After all, for each file a page has the user’s browser has to send one more request to the server, thereby slowing down the page speed with each additional file.

So, pare down your pages’ JavaScript and CSS files wherever possible. For instance, you can start by removing any unnecessary WordPress plugins from your site.

Heed Site Audit’s Warnings for Better Rankings

When Semrush delivers warnings in its Site Audit report, it doesn’t mean you need to act fast—that’s only true of error-level issues. But if you take the time to dig into your site’s warnings and make prudent changes as needed, you stand to gain a significant amount of traffic and drastically improve the user experience.

For that reason, don’t view Site Audit warnings as urgent problems. Instead, view each one as an opportunity to get more (and happier) visitors.

Image credits

Think with Google / February 2018

Screenshots by iloveseo.com / January 2021

Scott Granneman / Retrieved January 2021

IBM / April 2001

Google Search Central / January 2021

Google Security Blog / February 2018