You need the right technical SEO best practices to improve your website’s architecture and performance. This isn’t rocket science; it’s more like an intricate dance of optimization that will help your site soar higher than ever – if done correctly! This article will give you the low-down on improving your website’s structure and performance with simple yet effective technical SEO strategies. So please put on your dancing shoes; let’s explore these best practices together.

Technical SEO is all about ensuring search engines can easily crawl, index, and rank your website to provide relevant search results for users. It involves optimizing a website’s content and code so that both support that goal. When implemented correctly, these strategies can increase organic traffic, improve user experience, and earn better rankings in SERPs (search engine results pages).

By following the steps outlined within this article, you can take advantage of the full potential of Technical SEO and ultimately increase ranking visibility while providing visitors with a seamless user experience across devices. Whether you’re just starting out or have been working on SEO for years, there’s something here for everyone – so read on!

Definition Of Technical SEO – Things You Need To Know

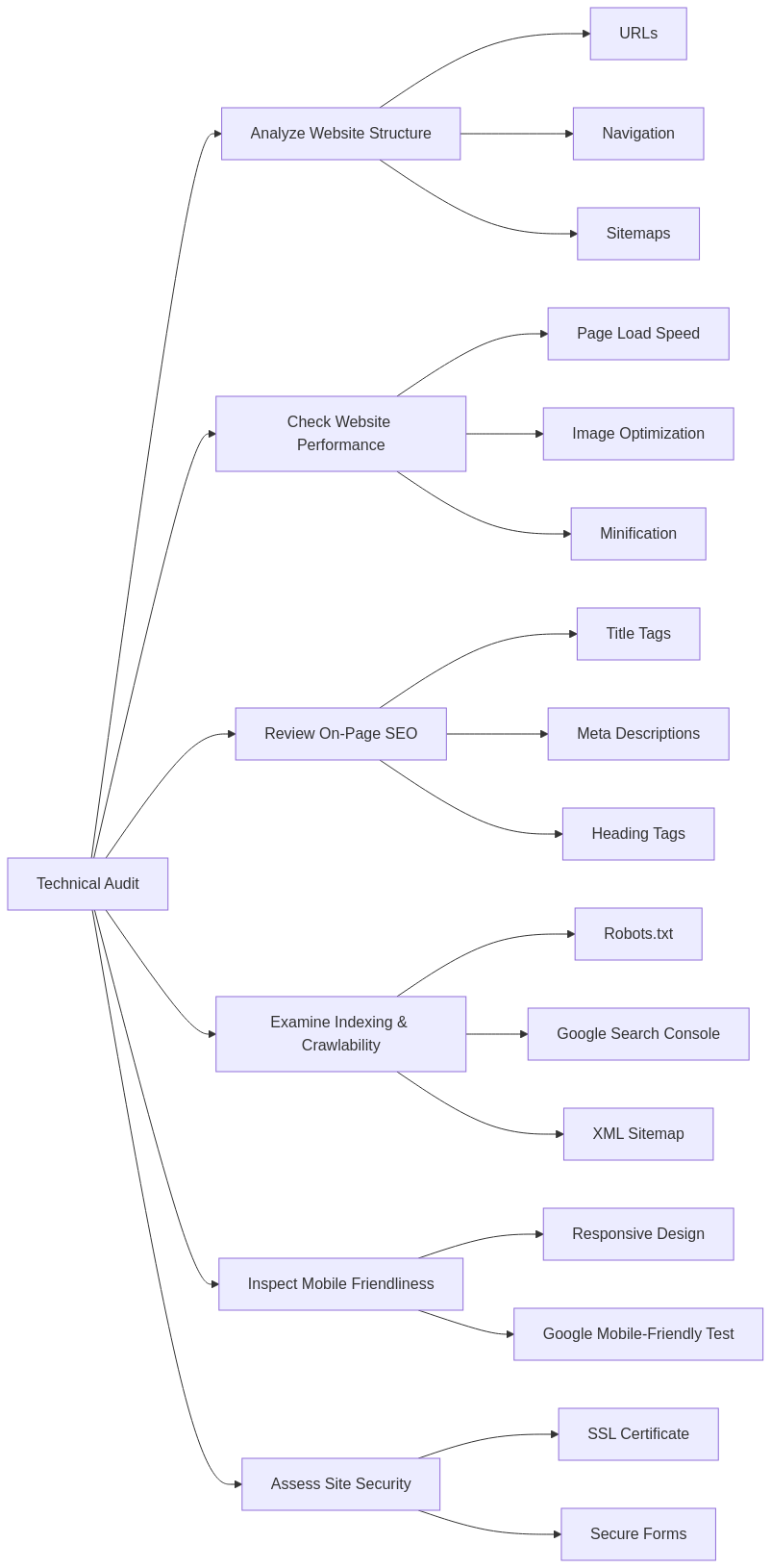

Technical SEO is a specialized branch of search engine optimization (SEO) that focuses on website architecture and performance. It’s all about ensuring that search engines can easily crawl, index, and understand the website to improve its visibility in organic search results. Technical SEO covers activities such as creating an XML sitemap, setting up redirects, URL structure optimization, and more.

The primary goal of technical SEO is to ensure that webpages are accessible and easy for crawlers to understand so they can accurately index them in SERPs. This helps increase organic rankings since it allows search engines to quickly identify content on the page and assess how relevant it is compared to other websites. Additionally, when implemented correctly, technical SEO provides users with a better experience: faster loading times, fewer errors, and easier navigation. All these factors contribute towards higher rankings in SERPs.

It is important for any business or individual who wants their site to rank well organically to carry out technical SEO best practices regularly. Without doing this, you could end up with a poorly performing website, resulting in lower visitor engagement levels than expected and, ultimately, less traffic from natural sources and referrals.

In short, technical SEO should always be part of your overall strategy if you want your website or online presence to drive maximum returns from organic traffic channels! Moving forward, we’ll discuss specific aspects related to crawling & indexing that play important roles in improving visibility within SERPs.

Google Website Crawling And Indexing

Ah, website crawling and indexing: the lifeblood of SEO. They can make or break your SEO efforts depending on how well you plan and optimize for them. But fear not! With a few simple tips, you’ll be well on your way to improved performance.

First off, let’s start with Google crawl optimization. This is essential for ensuring that search engines can efficiently find all pages within your site and index them properly. To achieve this, minimize duplicate content and make sure there are no broken links on your pages. Use robots meta tags appropriately, and create an XML sitemap file for submission when needed.

Next comes Google index optimization, which should include a good internal linking structure between pages and proper usage of heading tags (H1-H6) throughout each page. You must also pay attention to keyword density and ensure keywords are used naturally within titles, headings, and body copy rather than stuffed in wherever possible. Lastly, focus on optimizing image alt attributes, since these help search engine crawlers understand what the images on a page represent.

Regarding best practices for crawling and indexing, it is very important that URL parameters don’t prevent resources from being crawled. Also, consider using canonical URLs if multiple versions of a page exist, and avoid creating too many redirects, since long redirect chains can slow the crawling of parts of the website. This is especially relevant when a mobile site is served separately from the desktop one!
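To make the heading-tag advice a little more concrete, here is a minimal Python sketch that lists the heading levels on a page and flags two common problems. It uses only the standard library. The names `HeadingAudit` and `heading_issues` are made up for this example, and the two rules it checks (exactly one H1, no skipped levels) are common conventions assumed here rather than official search engine requirements.

```python
from html.parser import HTMLParser


class HeadingAudit(HTMLParser):
    """Collects heading levels (1-6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # Heading tags are h1..h6; record the numeric level.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    """Return human-readable warnings about the heading outline."""
    parser = HeadingAudit()
    parser.feed(html)
    issues = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:  # assumed convention: one main heading per page
        issues.append(f"expected exactly one h1, found {h1_count}")
    # A jump such as h2 -> h4 skips a level in the outline.
    for prev, cur in zip(parser.levels, parser.levels[1:]):
        if cur > prev + 1:
            issues.append(f"heading level jumps from h{prev} to h{cur}")
    return issues
```

Calling `heading_issues(page_html)` on a saved page returns an empty list when the outline follows both conventions.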
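One way to picture the URL parameter problem is to normalize every parameterized variant of a page to a single address. The sketch below does this with Python’s standard `urllib.parse` module. The `TRACKING_PARAMS` set and the function name `normalize_url` are assumptions for illustration; which parameters are safe to drop depends entirely on your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that only track campaigns or sessions.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize_url(url: str) -> str:
    """Return one stable form of a URL so variants map to the same page."""
    parts = urlsplit(url)
    # Keep only parameters that change the page content, in a stable order.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    )
    return urlunsplit((
        parts.scheme.lower(),
        parts.netloc.lower(),
        parts.path or "/",
        urlencode(query),
        "",  # drop the fragment; it is never sent to the server
    ))
```

The normalized form is a reasonable candidate for the canonical URL of each group of variants.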

To move forward effectively in our technical SEO journey, keyword research and usage must come next…

Keyword Research And Usage For Google SERPs

When it comes to SEO, keyword research and usage optimization are essential. Successful optimization requires understanding the search terms your customers use when looking for information related to your industry. Identifying these keywords will enable you to target long-tail keywords and improve website visibility in Google organic searches.

The first step is to conduct a comprehensive audit of your existing content and look for opportunities to optimize using relevant keywords. This involves evaluating titles, meta descriptions, headlines, and other components of each page on your domain. You should also assess how closely aligned individual pages are with specific topics or themes, which can help inform future keyword selection.

Once you have identified potential keywords and evaluated their relevance, you must consider how they fit into the overall architecture of your website. Incorporating them into page content naturally while ensuring good readability is important; this includes optimizing images with alt tags containing targeted keywords where appropriate. Additionally, it’s beneficial to include internal links between the various pages so that users can navigate more easily through your site hierarchy, which helps promote better SEO performance over time.

Finally, tracking metrics such as click-through rate (CTR) across different web pages allows us to measure our progress against goals over time, providing insight into what works best regarding keyword usage optimization strategy. With this data, we can continually refine our approaches based on user behavior and market demand shifts to create an effective online presence for our brand. As we explore internal link structure next, remember the importance of continually monitoring results for maximum success.
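Click-through rate is simply clicks divided by impressions, so it is easy to compute per page once you have the raw numbers. The Python sketch below shows the arithmetic; the page paths and figures are invented for illustration and would normally come from a search analytics export.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """CTR as a percentage; 0.0 when there are no impressions."""
    if impressions == 0:
        return 0.0
    return 100.0 * clicks / impressions


# Hypothetical per-page figures: (clicks, impressions).
pages = {
    "/blog/technical-seo": (120, 4000),
    "/services": (45, 900),
    "/contact": (0, 0),
}

report = {
    path: round(click_through_rate(clicks, impressions), 2)
    for path, (clicks, impressions) in pages.items()
}
```

Comparing `report` across time periods gives a quick view of which pages gained or lost ground.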

Why Is Your Site’s Internal Link Structure So Important?

“A stitch in time saves nine.” The same can be applied to SEO: a well-planned internal link structure is essential for good website architecture and performance. Internal links are key to providing your users with an optimal experience and helping search engine bots crawl the site more effectively. Moreover, they help distribute page authority throughout your domain, thus boosting rankings.

When creating an effective internal linking strategy, it’s important to consider user intent first. Links should tell users what pages they will visit when clicked on, using descriptive anchor text that makes sense in the context of surrounding content. Additionally, don’t overdo it – too many links may overwhelm visitors or create confusion. You must also ensure you’re linking back to relevant pages within the same domain; avoid offsite links unless absolutely necessary.

All your internal links must also be functional – broken links frustrate users and negatively impact your SEO ranking. To ensure this isn’t an issue, use automated tools like Google Search Console or Screaming Frog regularly to audit your site for any errors or issues related to internal linking. Lastly, make sure you have proper tracking set up so you can monitor how people interact with your website after clicking through from one page to another.
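Dedicated crawlers do this at scale, but the core of an internal link audit is small enough to sketch. The Python example below, using only the standard library, pulls every link and its anchor text out of a page and keeps the ones that stay on the same host. `LinkCollector` and `internal_links` are hypothetical names for this illustration, and checking whether each link actually resolves is left out.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collects (href, anchor text) pairs from <a> elements."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def internal_links(html: str, page_url: str) -> list[tuple[str, str]]:
    """Return absolute URL and anchor text for links on the same host."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlsplit(page_url).netloc
    result = []
    for href, text in collector.links:
        absolute = urljoin(page_url, href)  # resolve relative links
        if urlsplit(absolute).netloc == host:
            result.append((absolute, text))
    return result
```

The anchor text in each pair makes it easy to spot vague labels such as "click here" during a review.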

By following these simple tips and tricks, you can easily optimize and improve your website’s internal link structure – leading to better overall performance! With a solid foundation in place, we now turn our attention toward URL optimization…

URL Optimization – Help Your Site Make Sense

Now that we’ve discussed internal link structure, let’s explore the topic of URL optimization and how it can help improve your website’s architecture and performance.

One way to properly optimize URLs for SEO is by ensuring they are simple and intuitively organized. This helps search engines more easily crawl through them, resulting in better indexation. Using descriptive keywords within the URLs also aids with SEO rankings. It also makes them easier to remember and share across channels, increasing traffic potential.

Moreover, optimizing the pages behind those addresses is a crucial part of successful SEO performance. By ensuring every URL has a unique page title, meta description, headings, images, and other important elements, you help users and search engine crawlers understand each page’s purpose without confusion.

Here are some key aspects to consider when optimizing URLs for Technical SEO:

- Avoid having too many folders/subfolders in the same address, which makes navigation difficult.

- Ensure each folder has a descriptive name rather than just numbers or symbols.

- Always use hyphens instead of underscores between words to increase readability for both humans & bots.

- Use short but meaningful words instead of lengthy phrases to reduce character length.
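The points above can be sketched as a small slug generator in Python. The function name `slugify` and the default limit of six words are assumptions made for this example, not a rule from any search engine.

```python
import re
import unicodedata


def slugify(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, hyphen-separated URL segment."""
    # Strip accents so the slug stays ASCII-only.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Underscores and any other non-alphanumeric runs become word breaks.
    words = re.findall(r"[a-z0-9]+", ascii_text.lower())
    return "-".join(words[:max_words])
```

For instance, a title written with underscores and punctuation comes out as lowercase words joined by hyphens, trimmed to the word limit.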

Optimizing website structure thus plays an integral role in improving overall website performance – from boosting organic visibility to fostering user engagement rates. Therefore it pays off to invest time into getting these details right from the start, so you don’t encounter problems down the line! Let’s now move on to exploring site speed optimization strategies…

Site Speed Optimization – Faster is Better!

It’s essential to optimize your website for speed. Site speed optimization is an important part of technical SEO success and should be a priority when developing or updating a site. The faster the page loads, the better the user experience, which in turn can lead to higher rankings. This can be achieved through several methods, such as optimizing images and reducing redirects.

A starting point would be to perform a site speed analysis of the current performance of web pages on both desktop and mobile devices. Analyzing this data gives insight into where improvements are needed, allowing you to prioritize tasks accordingly. You may find that changes to HTML markup, caching strategies, minifying scripts, and external resources must be made to improve overall web performance optimization.
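To show what minifying means in practice, here is a deliberately naive Python sketch for CSS that removes comments and unnecessary whitespace. It is an illustration under simplifying assumptions: `minify_css` is a made-up name, and the regular expressions would mangle string values that contain braces, commas, or semicolons, so a dedicated minifier is the safer choice for production.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: strips comments and redundant whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove comments
    css = re.sub(r"\s+", " ", css)  # collapse runs of whitespace
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)  # trim around punctuation
    return css.strip()


original = "body {\n  color: red; /* brand color */\n}\n"
minified = minify_css(original)
saved_bytes = len(original.encode("utf-8")) - len(minified.encode("utf-8"))
```

Comparing the byte counts before and after gives a rough idea of how much a stylesheet can shrink.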

Furthermore, it’s also beneficial to use tools like Google PageSpeed Insights, which provide actionable recommendations for the core elements impacting page load times, including server response time and browser rendering issues. Additionally, leveraging AMP technology (Accelerated Mobile Pages) can help reduce download times significantly, thus improving user engagement with content reached on the site directly or indirectly via social media channels.

Finally, employing the abovementioned techniques will improve search engine visibility and produce tangible benefits for users who depend on optimal experiences during their online journeys – leading them towards more conversions from organic sources rather than paid campaigns alone. To ensure your website provides maximum value for all visitors regardless of device type or connection quality, assessing mobile usability should come next.

Mobile Usability – Make It Fit Your Screen

A sleek website design is essential, but how does it hold up on mobile devices? Usability has become a key factor in optimizing any website’s architecture. Mobile usability is important when designing your site to ensure that all users have access to the same content and functionality regardless of their device.

The importance of considering mobile usability cannot be overstated. As more and more people use their phones or tablets to browse online, you must ensure that your website caters to these audiences too. It’s not just about user experience either; Google now considers the loading speed and overall performance of websites on mobile devices when ranking them in search results.

Optimizing a website for mobile devices requires different considerations than desktop versions, such as page size, font size, tap targets, navigation styles, and loading times. To get ahead of the competition in this area, consider implementing features like accelerated mobile pages (AMP), which help reduce page load time by stripping out unnecessary code from webpages before serving them to visitors.

Mobile optimization should also ensure all images are correctly sized, so they don’t take too long to load. This can significantly improve the user experience while helping boost organic rankings simultaneously. With proper planning and implementation, optimizing your website’s mobile usability can help bring better visibility and engagement with potential customers without sacrificing quality or performance.

Structured data markup provides context around certain elements on your webpage to help search engines understand what information they contain. Knowing how to use structured data properly is essential for maximum SEO impact across desktop and mobile platforms.

Structured Data Markup – Schema

Now that we have discussed mobile usability, it’s time to focus on Structured Data Markup. This type of markup helps search engines understand the content and structure of your website better so they can serve up relevant results for users. Structured data markup is an important part of technical SEO best practices because it allows you to create rich snippets in SERPs which can help improve click-through rates from organic search results.

| Benefits | Examples |
|---|---|
| Organize | Schema/JSON-LD markups |
| Enhance | Rich Snippets such as Star Ratings & Reviews |
| Optimize | Long Tail Keywords & Meta Descriptions |

Structured data markup facilitates better indexing of webpages by providing information about the page’s contents and their relatedness. It also provides more accurate descriptions when displaying a webpage in SERP (Search Engine Results Pages). Furthermore, it enables websites to appear in Google’s featured snippet section at the top of SERP, thus increasing visibility and click-through rate.

For example, implementing structured data markup with long tail keywords will allow search engine crawlers to identify those terms easily when searching for them. Additionally, schema markups such as JSON-LD can make meta descriptions stand out more prominently than regular text versions allowing potential customers to quickly get a gist of what the page is about before clicking through. Finally, including ratings or reviews within the code will enable stars and other visuals to appear beside website listings, making them much more appealing to visitors who scan down their options on SERP.
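As a rough sketch of what ratings markup looks like, the Python snippet below builds a JSON-LD block for a product using schema.org’s `Product` and `AggregateRating` types. The function name `product_jsonld` and the sample values are hypothetical, and whether stars actually appear in results is up to the search engine.

```python
import json


def product_jsonld(name: str, rating: float, review_count: int) -> str:
    """Return a <script> element containing JSON-LD for a rated product."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Note: values containing "</script>" would need extra escaping.
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


snippet = product_jsonld("Trail Shoe", 4.6, 128)
```

The resulting `snippet` string would be placed in the page’s HTML, and the values must match what visitors can see on the page.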

Using structured data markup can bring numerous advantages; however, one must consider several things before implementation. Such considerations range from ensuring the accuracy of any facts mentioned in the metadata to compliance with applicable rules and regulations such as the GDPR (General Data Protection Regulation). Keeping these points in mind while creating a well-thought-out implementation plan will ensure maximum benefit from structured data markup without legal issues. With this knowledge, let us move on to image optimization, another key component of successful technical SEO strategies.

Image Optimization – Compress and Squeeze Those Pics

“A picture paints a thousand words.” This adage is especially true for SEO. Image optimization is important in website performance and architecture, as it can improve user experience and search engine rankings. Here are some best practices for optimizing images:

- Image Compression

  - Reduce file size by saving images in a compressed file format (e.g., JPEG)

  - Utilize online tools such as TinyPNG or Kraken to compress large files

- Image Sizes

  - Use standardized sizes for all images on your website.

  - If possible, use vector graphics instead of rasterized images so that they remain crisp across browser screens with different resolutions.

- Alt Tags

  - Include relevant alt tags that accurately describe each image’s content and purpose.

  - Avoid keyword stuffing; keep it concise but descriptive!
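The alt tag guidance above can be checked automatically. This Python sketch scans a page’s images and reports missing, overly long, or repetitive alt text. The 125-character limit and the “same word three times” rule are assumptions chosen for the example, not official thresholds, and `AltAudit` and `audit_alt_text` are hypothetical names.

```python
from html.parser import HTMLParser


class AltAudit(HTMLParser):
    """Records (src, reason) pairs for images with questionable alt text."""

    def __init__(self, max_length: int = 125):
        super().__init__()
        self.max_length = max_length  # assumed limit, not an official rule
        self.problems = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src", "")
        alt = attributes.get("alt")
        if alt is None:
            self.problems.append((src, "missing alt attribute"))
        elif len(alt) > self.max_length:
            self.problems.append((src, "alt text too long"))
        else:
            words = alt.lower().split()
            # Repeating one word three or more times hints at stuffing.
            if any(words.count(word) >= 3 for word in set(words)):
                self.problems.append((src, "possible keyword stuffing"))


def audit_alt_text(html: str) -> list[tuple[str, str]]:
    audit = AltAudit()
    audit.feed(html)
    return audit.problems
```

An empty `alt=""` is treated as acceptable here, since that is the usual way to mark purely decorative images.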

Image optimization should be thoughtfully implemented into any website design strategy to maximize SEO potential. Next up is canonicalization – this vital process ensures that URLs point to the right page without creating duplicate pages.

Canonicalization – Letting Google Know What Is Important

Canonicalization is an important SEO practice that helps manage duplicate content and prevent URL standardization issues. It’s a process of taking multiple URL versions and pointing them to the same page, thus consolidating any associated signals from search engines into one single version. This also prevents situations where different URLs are used for what should be seen by search engines as identical pages with identical content. You can ensure your website architecture is optimized for performance through canonicalization, resulting in better rankings and higher visibility in SERPs.

The main purpose of canonicalization is to help reduce or eliminate duplication on websites by normalizing all the URLs into one single version so that they can be accessed easily. When implemented correctly, it will improve user experience since visitors won’t find themselves stuck in endless redirection loops while navigating your site. Additionally, this technique assists with preventing penalties due to duplication issues when crawling and indexing your web pages.

When utilizing canonicalization techniques on your website, there are some best practices you’ll want to follow, such as avoiding abusive redirects and making sure self-referencing canonical tags are set up correctly, since mistakes here could result in poor performance in SERPs. Also, remember that 301 permanent redirects should be preferred over 302 temporary redirects whenever a move is permanent, as they send a stronger signal and pass link equity between the two pages more effectively.
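Redirect chains and loops are easy to miss by eye, so here is a small Python sketch that follows a redirect map to its final destination and raises an error on a loop. The map is a plain dictionary standing in for your server’s redirect rules, and `resolve_redirect` and the five-hop limit are assumptions for illustration.

```python
def resolve_redirect(
    url: str, redirects: dict[str, str], max_hops: int = 5
) -> tuple[str, int]:
    """Follow a redirect map and return (final URL, number of hops).

    Raises ValueError on a loop or when the chain exceeds max_hops.
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen:
            raise ValueError(f"redirect loop detected at {url}")
        if hops > max_hops:
            raise ValueError("redirect chain is too long")
        seen.add(url)
    return url, hops


# Hypothetical rules: one two-step chain and one loop.
redirects = {"/old": "/older", "/older": "/new", "/a": "/b", "/b": "/a"}
```

Any source URL that takes more than one hop is a candidate for pointing straight at the final page instead.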

By implementing proper canonicalization strategies on your website, you’ll have peace of mind knowing that search engine algorithms view each page uniquely without the risk of being penalized for duplication errors or getting lost among numerous competing URLs from other sites vying for attention online. With these considerations taken care of, we can move on to creating and submitting sitemaps – another vital step toward optimizing our website’s performance!

Sitemap Creation And Submission

The next technical SEO best practice is sitemap creation and submission. A successful website architecture requires an up-to-date and well-structured sitemap that can be used as a guide for search engine crawlers, helping them to index your content more effectively. To ensure you completely understand this process, we will discuss how to create and submit an optimized sitemap, analyze its performance, and build it correctly.

| Step | Description |
|---|---|
| 1 | Create Sitemaps with XML or HTML formats based on the size of your site |
| 2 | Submit your sitemaps to Google Search Console & Bing Webmaster tools |
| 3 | Monitor & Optimize: Analyze the traffic from organic sources & make changes if needed |
| 4 | Build Correctly: Make sure URLs are valid & navigate properly between pages on your site |

Creating accurately structured XML or HTML formatted sitemaps is essential for optimizing website structure for search engines. Using these files helps search engine crawlers find all the important resources within a website much faster than before. Once completed, submitting these documents to both Google Search Console and Bing Webmaster Tools should be done so they are available for crawling by each platform’s respective bots. This will also give you better insights into how the two platforms view your web pages’ optimization level. Afterward, monitoring and optimizing your submitted sitemaps should be taken seriously since analytics provided by these services can help identify any issues related to crawling ability, such as broken links or duplicate content problems. Lastly, building out carefully constructed redirects between different pages ensures that users don’t get stuck navigating through dead ends while browsing your site – something beneficial for SEO and the overall user experience.
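For a small site, an XML sitemap can be generated with a few lines of Python and the standard library. The sketch below follows the sitemaps.org format with `loc` and `lastmod` elements. The function name `build_sitemap` and the sample URL are placeholders for this example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[tuple[str, str]]) -> str:
    """entries holds (absolute URL, last-modified date as YYYY-MM-DD)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([("https://example.com/", "2024-01-15")])
```

The returned string can be saved as `sitemap.xml` at the site root and then submitted through each search engine’s webmaster tools.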

To further enhance a website’s visibility online, robots exclusion protocols must also be configured correctly, given their influence on what crawlers may or may not access in specific areas of a site. That makes them another crucial piece of the puzzle when putting together effective SEO strategies.

Robots Exclusion Protocols

Robots Exclusion Protocols (REP) are essential tools in technical SEO. REP allows website owners to control which areas of their websites can be accessed by web crawlers, search engine spiders, and other automated agents. These protocols help keep a website secure from malicious content scraping or indexing that could harm its performance.

Here are three key points about robots exclusion protocols:

- Robots.txt is the main file for controlling access to a website via REP.

- Robot-exclusion directives must be clearly defined and communicated to crawlers and other automation agents with precision and accuracy.

- The robot protocols should be regularly monitored and updated when necessary due to changes in your site’s architecture or technology updates on the web infrastructure level.
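A quick way to verify that your directives say what you intend is to test paths against them. The Python sketch below uses the standard library’s `urllib.robotparser` for this. The `ROBOTS_TXT` content and the helper name `is_crawlable` are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def is_crawlable(path: str, user_agent: str = "*") -> bool:
    """Check whether the rules above allow user_agent to fetch path."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, path)
```

Running a list of important URLs through a check like this after every robots.txt change helps catch rules that block more than intended.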

For optimal security protection, it’s important to ensure that all exclusion protocols have been correctly implemented across every website page, including any subdomains or microsites you may have connected with the primary domain URL. Regularly checking all robot directives will help maintain overall security on your website and protect against potential cyber threats like malware injections or scrapers attempting to steal valuable data from your online presence. Additionally, ensure no crawl problems are caused by incorrectly written rulesets within the robots.txt file; these could lead to more serious issues if not addressed properly over time.

It’s also worth noting that some search engines like Google offer various options for customizing how crawlers interact with certain sections of a website, such as “noindex” tags, allowing even greater control over what gets indexed into search results pages without relying solely on REP. To maximize the efficiency and effectiveness of both REP and customized crawling instructions, reviewing them together periodically is highly recommended as a best practice for protecting your digital assets while optimizing visibility through organic search traffic channels. With this knowledge under our belt, we can now move on to evaluating and enhancing the security posture of our websites.

Security Evaluation And Enhancement – Keeping It Safe!

Securing a website is paramount for any technical SEO project. Security evaluation and enhancement should be the first step in improving website performance. Through security protocols, vulnerability scans, and other measures, it’s possible to identify potential risks and take action to mitigate them before they become an issue.

The best way to assess website security is by evaluating existing security policies and software configurations. This can give insight into areas needing improvement or further protection against malicious attacks. In addition, performing regular updates of current programs will help ensure that all data remains safe from exploitation.

Furthermore, it is important to stay up to date with industry-standard cryptographic techniques such as SHA-256 hashing and TLS/SSL certificates, and to implement firewalls and intrusion detection systems (IDS) that monitor suspicious activity. Additionally, websites should also have secure login methods such as two-factor authentication (2FA), CAPTCHAs, or biometric scanners set up to protect user information.

Finally, organizations should invest in continuous monitoring through automated scanners to identify emerging vulnerabilities quickly before hackers can exploit them. By proactively addressing these issues ahead of time, businesses can rest assured knowing their site is protected against malicious threats while maintaining optimal web performance standards. With this foundation of comprehensive security in place, we can move on to content optimization strategies in the next section.

Content Optimization – Natural Language Processing

Now that your website has been secured and evaluated, optimizing content for better search engine performance is the next step. Content optimization consists of various strategies and techniques designed to improve the visibility of web pages in organic search results. To ensure optimal results, it’s important to understand how these tactics can be used together to maximize SEO potential.

First, use keywords throughout all text on the page. This will help crawlers identify what topics are being discussed and rank those pages higher in search engine result pages (SERPs). Keywords should appear naturally within context so they do not disrupt readability; stuffing them into sentences won’t do any good! Additionally, ensure titles accurately reflect the page’s contents, which helps users and search engines find relevant information quickly.
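If you want a number to watch while editing, keyword density is straightforward to compute. The Python sketch below counts how many of a text’s words belong to occurrences of a phrase. The function name `keyword_density` is made up for this example, and no particular percentage is a known ranking threshold; the figure is only a rough guide for spotting over-use.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Percentage of words in text that belong to occurrences of keyword."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Slide a window of n words over the text and count exact matches.
    hits = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == target
    )
    return 100.0 * hits * n / len(words)


sample = "Technical SEO helps. Good technical SEO takes time."
density = keyword_density(sample, "technical seo")
```

A sudden jump in this figure after a rewrite is a hint to reread the copy for stuffing.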

Next up is optimizing images with appropriate file names, captions, and alternative texts – also known as ALT tags. These attributes provide helpful hints about an image’s context, allowing crawlers to index them correctly for SERP rankings. Furthermore, adding internal links between related articles will create a network of interconnected pieces that encourages repeat visitors by providing more material from your site. Lastly, take advantage of third-party tools like Google Search Console or Bing Webmaster Tools to learn more about how your content is performing online.

By following these steps, you’ll be able to craft content that meets user needs while still adhering to best practices for SEO success. You can immediately start seeing organic traffic improvements with accurate keyword usage, optimized images, and internal linking! Now that you have implemented some basic content optimization measures, it’s time to focus on monitoring SEO performance…

Monitoring SEO Performance – Getting The Fruits Of Your Labor

Monitoring SEO performance is key for any website to stay ahead of the competition and achieve organic ranking success. It’s important to track your website’s search engine visibility and measure its overall performance. Doing so will help you identify areas that need improvement or additional optimization. Let’s look at some popular methods for monitoring a website’s SEO performance:

| | Website Tracking | Search Engine | Website Analytics |
|---|---|---|---|
| Tools | Google Analytics | Bing Webmaster Tools | Google Search Console |
| Purpose | Measure Traffic & Performance | Monitor Indexed Pages & Crawl Errors | Track Rankings & Queries with Keywords |

Google Analytics can measure traffic levels, user engagement, and other important metrics related to site performance. Additionally, it provides insights into how users arrive at your pages and how they interact with each page’s content, including how much time visitors spend on a page before leaving the site. Meanwhile, Bing Webmaster Tools allows you to monitor indexed pages on the search engine and detect crawl errors that might affect rankings in its index. Last, Google Search Console gives you access to valuable data about the queries people use when finding your website through keyword searches. This tool also helps you determine which queries generate the most clicks.

With all these tools in hand, webmasters gain valuable insight into how their websites perform on different search engines such as Google or Bing, what type of content resonates best with users, which keywords drive the most traffic to their sites, and many other aspects affecting SEO success. Having this information makes it easier for them to spot opportunities for growth and make necessary improvements more efficiently. So if you’re serious about improving your website’s architecture and performance, tracking SEO progress should become essential to your day-to-day operations.

Frequently Asked Questions

What Is The Best Way To Handle Duplicate Content?

Did you know that the percentage of websites with duplicate content is estimated to be at least 30%? This begs the question, what is the best way to handle duplicate content? One of the most important elements in a successful SEO strategy is managing and avoiding duplicate content. Here we will discuss some effective strategies for tackling this issue.

When it comes to dealing with duplicate content, there are several solutions available. The key lies in developing a comprehensive plan and implementing it effectively. A good starting point would be to use 301 redirects or canonical tags when possible – they can help ensure search engines only index one version of your web page, thus preventing potential penalties due to duplication. Additionally, you should prevent any accidental creation of new versions of existing pages by ensuring your URLs are concise and consistent across all platforms. Furthermore, if needed, you could also block certain sections of your website from appearing in search engine results via a robots.txt file or meta robots tag.

The next step would be to create a detailed audit of your site’s architecture so that you have visibility into how each piece fits within its overall structure. You should then identify areas with overlapping information or unnecessary repetition that can lead to an unwieldy amount of duplicated material on your site and rank-reducing algorithm penalties from Google and other major search engines. To ensure every part works together efficiently, you may need to optimize internal linking structures and navigation menus, making them user-friendly while still conforming to SEO best practices such as keyword usage and proper anchor text formatting.
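During such an audit, exact duplicates can be found by fingerprinting each page’s text. The Python sketch below hashes whitespace- and case-normalized body text with SHA-256 and groups URLs that share a fingerprint. It only catches identical copy, not near-duplicates, and the function names and sample pages are hypothetical.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    # Normalize case and whitespace so trivial differences do not matter.
    normalized = re.sub(r"\s+", " ", text.strip().lower())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """pages maps URL -> extracted body text; returns groups of duplicates."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl output for illustration.
pages = {
    "/shoes": "Red  Shoes\nfor trail running",
    "/shoes?ref=nav": "red shoes for trail running",
    "/about": "About us",
}
```

Each group returned by `find_duplicates(pages)` is a set of URLs that should probably share one canonical address or be consolidated with a 301 redirect.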

Here are some additional tips for handling duplicate content:

- Utilize cross-domain canonicalization where applicable

- Use unique titles & descriptions for every page

- Monitor changes regularly using analytics tools like Google Search Console

- Take advantage of social media sharing features (LinkedIn, Twitter, etc.)

By following these strategies and investing time in understanding how different pieces work together, businesses can avoid costly penalties while optimizing their sites for maximum performance benefits. If done properly, the result will be increased traffic and improved rankings – essential for success in today’s digital landscape!

How Do I Optimize Content For Voice Search?

Voice search optimization is a key element of online success. As technology evolves, companies must stay ahead of the trends to remain competitive. To optimize content effectively for voice search, businesses need to understand how it works and what techniques they can use to maximize their results.

When optimizing content for voice search, several best practices should be incorporated. First and foremost, website owners should focus on creating unique content tailored specifically for this type of search engine optimization (SEO). This includes considering query intent, natural language processing, and local listings management. Additionally, webmasters should ensure that their page titles include keywords relevant to the user’s query and that structured data markup is used if possible.

To improve voice search performance, website owners should also consider using tools like Google Search Console or Bing Webmaster Tools. These platforms show which queries bring users to your site and help you decide which pages or topics need more attention from an SEO perspective. Voice queries are generally not reported separately, so long, conversational queries are a reasonable proxy. These services also help identify indexing or page-speed issues that could negatively affect rankings.

Finally, businesses need to keep up with changes in the industry by staying abreast of new strategies related to voice search optimization. For example, some websites have begun optimizing for featured snippets (short paragraphs that provide quick answers) to drive better click-through rates from organic traffic when users search via speech recognition software. By combining tactics such as this one with traditional SEO methods, webmasters can increase their brand’s visibility and, over time, their revenue.

How Can I Measure The ROI Of The SEO Efforts Of My Site?

Have you ever wondered how to measure the return on investment (ROI) of your SEO efforts? To track and understand the effectiveness of search engine optimization, there are several steps to take.

The first step is to take a look at your website analytics. This will help you understand where organic traffic comes from and other metrics such as page views, bounce rate, time spent on site, etc. You can then use this information to identify areas for improvement or opportunities for further optimization. Additionally, by keeping track of these metrics over time, you’ll have a better idea of what strategies are working and which aren’t.

Next, if possible, set up an SEO tracking system so that you can monitor performance more closely.

To do this effectively, you should focus on key performance indicators (KPIs) such as:

- Organic Traffic:

  - Number of visitors

  - Pages per visit

  - Average session duration

- Conversion Rate:

  - Percent of visitors who complete goals/objectives

  - Shopping cart abandonment rate

By monitoring your KPIs regularly, you’ll be able to determine whether or not your efforts have the desired effect and make adjustments accordingly. This will also allow you to assess the ROI of each individual strategy so that you can optimize accordingly and maximize results.
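The KPIs above reduce to simple arithmetic once the raw counts are exported from your analytics tool. The sketch below uses common definitions (conversions divided by visitors, and net return divided by cost); the function names and the sample figures are made up for illustration, and your analytics platform may define these metrics slightly differently.

```python
def conversion_rate(conversions, visitors):
    """Share of visitors who completed a goal (0.0 when there were no visitors)."""
    return conversions / visitors if visitors else 0.0


def cart_abandonment_rate(carts_created, purchases_completed):
    """Share of shopping carts that did not end in a purchase."""
    if carts_created == 0:
        return 0.0
    return 1 - purchases_completed / carts_created


def seo_roi(revenue_from_organic, seo_cost):
    """Return on investment: net gain from organic traffic relative to SEO spend."""
    if seo_cost == 0:
        raise ValueError("seo_cost must be non-zero to compute ROI")
    return (revenue_from_organic - seo_cost) / seo_cost


# Hypothetical monthly figures.
rate = conversion_rate(conversions=100, visitors=4000)
abandonment = cart_abandonment_rate(carts_created=250, purchases_completed=100)
roi = seo_roi(revenue_from_organic=15000, seo_cost=5000)

print(f"Conversion rate: {rate:.1%}")
print(f"Cart abandonment: {abandonment:.1%}")
print(f"SEO ROI: {roi:.0%}")
```

Tracking these values month over month, rather than as one-off numbers, is what makes it possible to attribute a change to a specific strategy.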

Finally, it’s important to remember that SEO isn’t just about increasing rankings; it’s also about providing value through content creation and link-building activities that drive organic growth. If done correctly, these tactics can provide long-term benefits for your business and improve overall ROI. Keep testing different approaches until you find the best combination for your needs!

What Are The Best SEO Tools To Use?

When it comes to SEO, a variety of tools are available for website owners and marketers. Knowing which are best suited to your needs is key to improving a website’s architecture and technical SEO. This section covers the main categories of SEO tools that can help optimize any website.

An SEO analysis tool is one of the most essential pieces of software an SEO specialist should have. With such a program, you can easily identify what areas need improvement on your site and where potential gains or losses could occur from certain changes. These results can provide valuable insight into what keywords would yield better visibility and higher rankings on search engines like Google.

Another important element of SEO success is keyword research. A good keyword research tool will help you uncover which terms users are searching for related to your niche and industry so that you can create content targeted toward them and gain organic traffic from those queries. Additionally, with such data, you’ll know exactly how competitive each term is – giving you invaluable insights into which topics may offer more opportunities than others!

Furthermore, understanding who is linking to your website (and why) is just as vital as knowing what keywords people are using to find it; after all, backlinks act as votes of confidence in the eyes of search engine algorithms! Access to an effective backlink analysis tool makes it much easier to understand where these links come from (and whether they’re beneficial or detrimental). It also allows one to monitor competitors’ link profiles, highlighting areas where improvements can be made over time.

Lastly, performing periodic site audits helps ensure that no issues go unnoticed when optimizing a website’s architecture and performance for search engine crawlers. This process involves checking for broken links/images; identifying pages with thin content; reviewing page titles & meta descriptions; scanning source code; etc. All these tasks become significantly simpler through high-quality site audit software – ensuring nothing slips past our attention during implementation!
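To make a few of these audit checks concrete, here is a minimal sketch using only Python’s standard-library `html.parser`. It is not how any particular audit product works: the `PageAudit` class, the `audit_page` function, and the sample HTML are hypothetical, and it covers only three checks (page title, meta description, and images with no `alt` attribute).

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Collect the title, meta description, and images lacking an alt attribute."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.images_missing_alt = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.meta_description = attributes.get("content") or ""
        elif tag == "img" and "alt" not in attributes:
            self.images_missing_alt.append(attributes.get("src") or "")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(html_text):
    """Return a list of human-readable issues found in one HTML document."""
    parser = PageAudit()
    parser.feed(html_text)
    parser.close()
    issues = []
    if not parser.title.strip():
        issues.append("missing title")
    if not parser.meta_description:
        issues.append("missing meta description")
    for src in parser.images_missing_alt:
        issues.append(f"image without alt text: {src}")
    return issues


# Hypothetical page: it has a title, but no meta description and one image without alt.
sample = """<html><head><title>Running Shoes</title></head>
<body><img src="/hero.jpg"><img src="/logo.png" alt="Example Store logo"></body></html>"""

issues = audit_page(sample)
```

Dedicated audit software adds crawling, broken-link detection, and thin-content checks on top of per-page inspections like this one.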

In short, by leveraging comprehensive SEO tools like analysis programs, keyword research utilities, backlink analyzers, and site auditing software – any webmaster or marketer has everything they need to improve their website’s architecture and performance for maximum SEO effectiveness!

How Do I Use SEO To Drive Traffic To My Website?

Are you looking for the most effective way to drive traffic to your website? SEO is one of the best strategies for boosting visibility and online presence. One commonly quoted estimate puts organic search at 92% of all web traffic, although figures vary widely by study and industry. SEO techniques help optimize websites by improving their architecture and performance, ultimately driving more visitors.

To use SEO effectively, it’s necessary to understand how search engines work so that you can maximize its potential. Improving a website’s architecture involves optimizing content with targeted keywords relevant to what the page is about and adding meta descriptions and title tags with those same keywords. This allows search engines like Google or Bing to find pages on your site easily when people search for these topics. Ensuring each page loads quickly and efficiently also contributes to good SEO performance.

Furthermore, various tools can be used to measure a website’s overall health. Screaming Frog lets users audit internal and external links and check for broken links or missing images and alt text. Moz Pro provides insights into keyword rankings along with other helpful information, such as domain authority data across multiple sites. Used correctly, these resources show where improvements are needed, so you can address issues such as slow loading times or poorly optimized titles and descriptions before they push bounce rates up.

This type of strategy ensures greater visibility in SERPs (search engine results pages) for target audiences while providing valuable content at the same time, which has become increasingly important as consumers expect more innovative solutions than ever before. It is therefore essential for businesses that want to succeed online to invest in SEO if they haven’t done so already. Doing so will allow them to reach their goals faster while still maintaining high quality standards throughout the process.

Applying proven technical methods consistently should bring the desired outcomes over time, especially when combined with other marketing activities such as paid ad campaigns or social media outreach. SEO is a powerful tool worth considering seriously if you are looking for ways to improve your website’s visibility over the long term!

Conclusion

As a technical SEO specialist, I have seen countless websites suffer from poor architecture and performance due to inadequate SEO practices. However, any website can be optimized for search engine success with the right strategies and best practices.

Taking proactive steps such as dealing properly with duplicate content and optimizing for voice search can greatly improve your website’s visibility on SERPs. Additionally, tools like Google Search Console and Ahrefs make it possible to measure the ROI of your efforts while driving targeted traffic to your site.

Ultimately, by utilizing these techniques, we can ensure that your website is well-optimized and performing at its highest potential. Therefore, through proper implementation of technical SEO best practices, you should see an improvement in both organic ranking and conversions over time.