If you or your kids have ever watched Sesame Street, then the names Bert and Ernie probably make you think of friendship and silly songs, rather than fierce rivalry. But in the case of Google’s BERT and Baidu’s ERNIE, rivalry is inevitable.

And with the July 2021 unveiling of the latest version of Baidu’s pre-trained language model ERNIE 3.0, the competition is now more cut-throat than ever.

What Is Baidu’s ERNIE 3.0?

On July 5, 2021, a team of more than 20 researchers from the Chinese search engine company Baidu published a paper called ERNIE 3.0: Large-scale Knowledge Enhanced Pre-training for Language Understanding and Generation.

While the paper’s title isn’t exactly catchy, the findings it reveals are certainly memorable. As the researchers explain, Baidu’s pre-trained language model, ERNIE 3.0, has officially surpassed human performance on the most difficult natural language processing (NLP) benchmark test currently available.

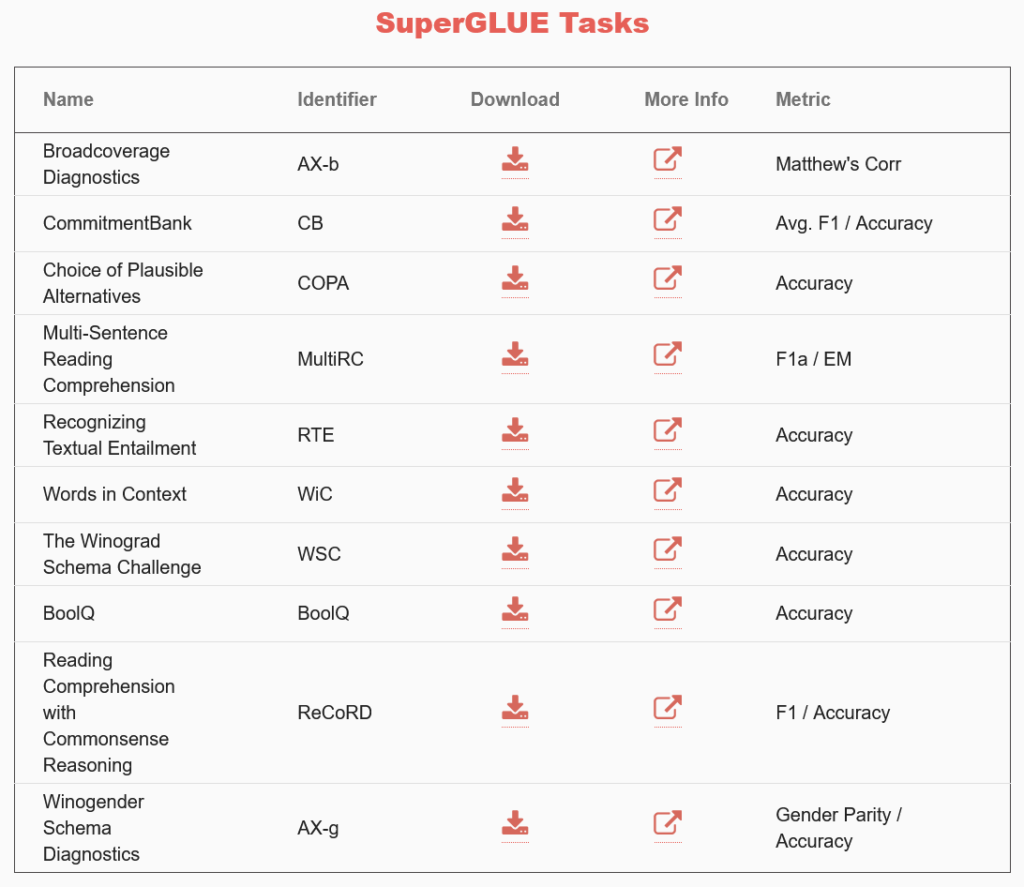

That benchmark test is called SuperGLUE, and it evaluates NLP models by giving them a series of challenging language understanding tasks. These are designed to test skills such as reading comprehension, recognizing textual entailment and identifying words in context.

When humans complete SuperGLUE’s tasks, they generally receive a score of 89.8 percent. But when ERNIE 3.0’s English version completed them, it earned a score of 90.6 percent.

Its Chinese version also outperformed state-of-the-art models on 54 Chinese NLP tasks.

This bilingual proficiency is one of ERNIE 3.0’s most impressive traits. Baidu researchers went to great lengths to ensure such proficiency, compiling a large-scale Chinese text corpus that totals 4TB in size, which they describe as the largest of its kind.

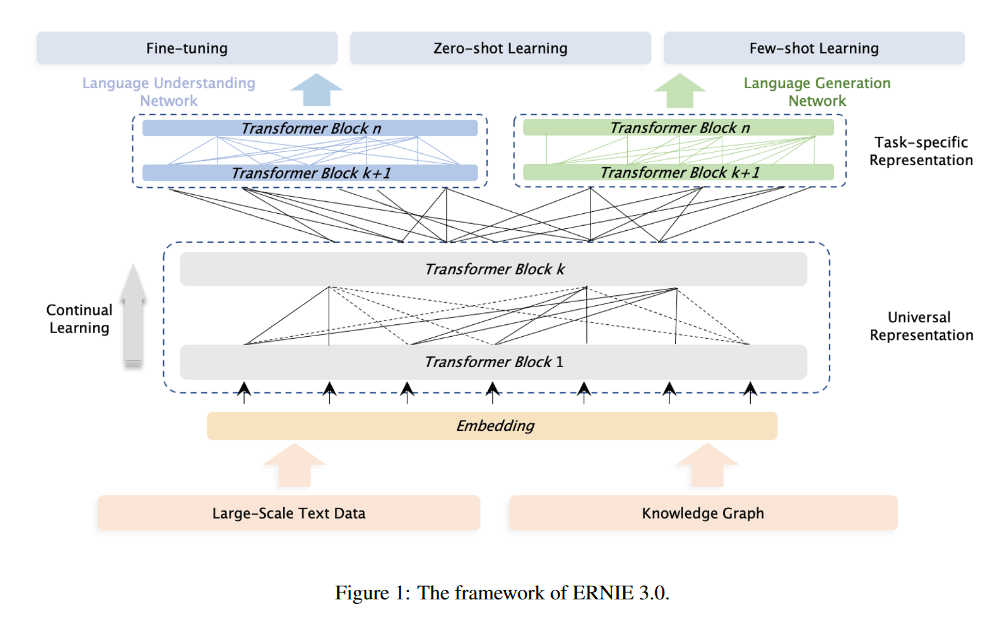

So how does ERNIE 3.0 work, exactly? While its structure is undeniably complex, for the sake of a high-level overview it can be boiled down to three core elements:

- Few-shot learning, i.e. giving the model only a handful of labeled examples of a task;

- Zero-shot learning, i.e. asking the model to perform a task without any labeled examples of it; and

- Fine-tuning, i.e. further training the already pre-trained model on task-specific data to improve its performance even further.
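To make the difference between the first two elements concrete, here is a minimal Python sketch. It is a generic illustration and not ERNIE 3.0’s actual interface: the `build_prompt` helper, the sentiment task and the example texts are all hypothetical. The only thing that changes between zero-shot and few-shot is whether labeled examples are placed in front of the query.

```python
def build_prompt(task, query, examples=()):
    """Assemble a text prompt for a language model.

    With no examples the prompt is zero-shot; with a handful it is few-shot.
    This helper is hypothetical and only illustrates the idea.
    """
    parts = [task]
    for text, label in examples:
        parts.append(f"Text: {text}\nLabel: {label}")
    # The query is left unlabeled so the model has to supply the label.
    parts.append(f"Text: {query}\nLabel:")
    return "\n\n".join(parts)


task = "Classify the sentiment of each text as positive or negative."
query = "The plot was dull."

# Zero-shot: the model sees the task description and the query only.
zero_shot = build_prompt(task, query)

# Few-shot: the model also sees a couple of labeled examples first.
few_shot = build_prompt(
    task,
    query,
    examples=[
        ("I loved every minute.", "positive"),
        ("A waste of time.", "negative"),
    ],
)

print(zero_shot)
print("---")
print(few_shot)
```

Fine-tuning differs from both: instead of changing the prompt, it updates the model’s weights with additional labeled training data.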

All three elements are facilitated by both large-scale text data and a knowledge graph, as shown in the researchers’ diagram of ERNIE 3.0’s framework:

The result of this process is a pre-trained model that scores above the human baseline on SuperGLUE in English, outperforms earlier models on Chinese tasks, and can also translate English to Chinese with a high level of accuracy.

What Is Google’s BERT?

The story of BERT begins very similarly to the story of ERNIE 3.0. In October 2018, a team of four Google researchers first posted a paper titled BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, followed by a revised version on May 24, 2019.

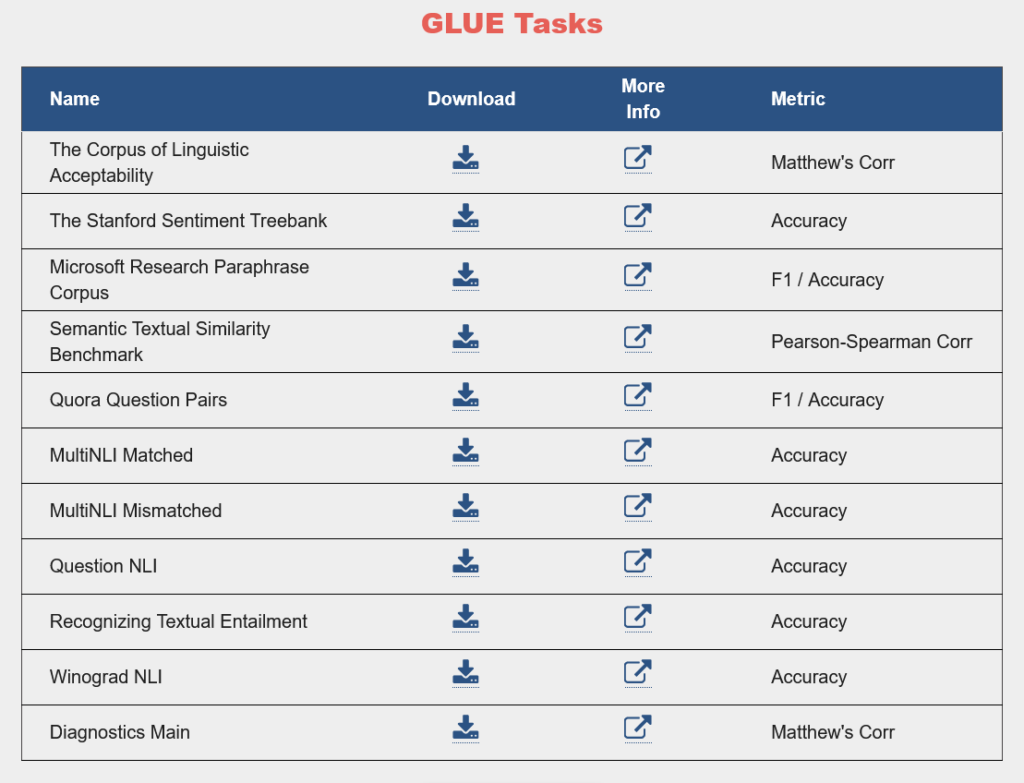

As the researchers revealed, the BERT model had obtained new state-of-the-art results on eleven NLP tasks, including a GLUE score of 80.5 percent (at the time, the GLUE benchmark hadn’t yet been overshadowed by the more difficult SuperGLUE).

Similarly to SuperGLUE, GLUE evaluates NLP models with the help of several tasks designed to test language comprehension:

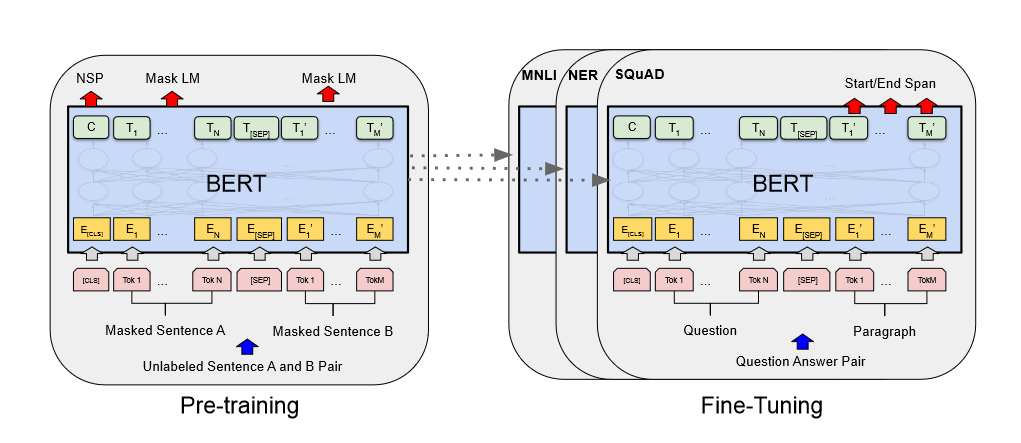

As opposed to ERNIE 3.0’s three core training elements, BERT has two:

- Pre-training, in which the model is trained on unlabeled data; and

- Fine-tuning, in which the model is further trained using labeled data.

The pre-training phase is accomplished by feeding BERT unlabeled sentence A and B pairs, while the fine-tuning phase is accomplished by feeding it labeled, task-specific inputs such as question and paragraph pairs. This is best illustrated by the Google researchers’ own diagram:

For the pre-training data, Google utilized BookCorpus (a collection of unpublished English-language novels that consisted of 800 million words at the time) and English Wikipedia (which consisted of 2.5 billion words at the time).
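The sentence A and B pairs used in pre-training can be sketched in a few lines of Python. This is a simplified illustration rather than Google’s implementation: it splits on whitespace instead of using BERT’s WordPiece tokenizer, and it masks fixed positions instead of sampling them at random as the paper does. The function names `pack_pair` and `mask_tokens` are made up for this example.

```python
def pack_pair(tokens_a, tokens_b):
    """Join sentence A and sentence B into one BERT-style input sequence.

    Returns the token list and the segment ids (0 for A, 1 for B).
    """
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    # [CLS] and the first [SEP] belong to segment 0, the final [SEP] to segment 1.
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


def mask_tokens(tokens, positions):
    """Replace the tokens at the given positions with [MASK].

    Simplification: real pre-training picks positions randomly.
    """
    return ["[MASK]" if i in positions else tok for i, tok in enumerate(tokens)]


sentence_a = "the man went to the store".split()
sentence_b = "he bought a gallon of milk".split()

tokens, segment_ids = pack_pair(sentence_a, sentence_b)
masked = mask_tokens(tokens, positions={2, 9})

print(tokens)
print(segment_ids)
print(masked)
```

During pre-training the model learns to recover the masked words and to predict whether sentence B really follows sentence A, which is why no human labeling is needed.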

As such, BERT was initially only applied to English searches. In December 2019, Google announced that BERT was rolling out to more than 70 languages worldwide, though it’s unclear whether the model was trained on datasets in other languages or was simply applied to other languages using existing translation technology.

Will ERNIE Overtake BERT?

With the revelation of ERNIE 3.0’s incredible NLP capabilities, comparisons to BERT are inevitable. But to understand whether ERNIE will overtake BERT, you need to first understand how Baidu and Google compare in terms of the search engine landscape.

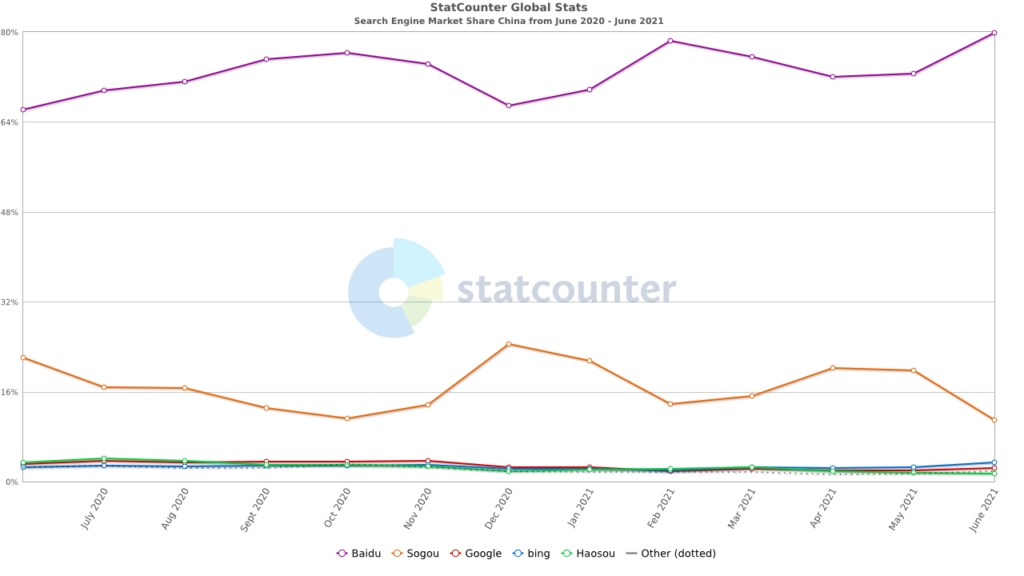

Most crucially, Baidu is the predominant search engine in China while Google holds the same status in the rest of the world. In the global search engine market, Google boasts a market share of more than 90 percent. But in the Chinese search engine market, Baidu has a market share of about 80 percent:

And since China’s population clocks in at more than 1.4 billion people as of July 2021 (that’s about 18 percent of the world population), Baidu’s power should not be underestimated.

So in a way, it only makes sense that Baidu has created an NLP pre-training model that rivals Google’s—the company has a vast amount of resources and a team of world-class researchers and engineers on its side.

And given that Baidu is primarily used in a country where less than one percent of the population speaks English, what’s truly remarkable about ERNIE 3.0 is its ability to accurately translate Chinese to English and vice versa.

To sum up the situation, Google doesn’t have to worry about ERNIE 3.0 overtaking its BERT algorithm, at least not for now. As long as Google and Baidu remain in separate markets, there won’t be any direct competition, just the kind of long-distance one-upmanship we’re already familiar with.

But if Google should ever enter Baidu’s market or vice versa, both companies will need to get ready for a battle of the NLP models.

How to Optimize for Baidu

If your site is targeting a Chinese audience, then optimizing for Baidu is absolutely necessary. In light of ERNIE 3.0’s release, it’s especially important to ensure that all your site’s content sounds as natural as possible.

For the rest of your Baidu SEO efforts, our tips for Baidu optimization can help—here are some of the most critical:

- Procure high-quality translation to ensure your messages come across loud and clear.

- Optimize each page’s meta description since Baidu will use it as a ranking signal.

- Avoid JavaScript to maximize your site’s crawlability.

- Optimize each image’s alt text since it will be used to determine rankings.

- Avoid controversial topics that could get your site hidden as per China’s online content restrictions.

- Place your most important content first to ensure Baidu’s crawlers can see it.

- Host your site on local servers to improve your site’s loading time.

- Flesh out your backlink profile with links from reputable Chinese websites.
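A few of the tips above (meta descriptions, image alt text and JavaScript usage) can be spot-checked automatically. The following is a minimal sketch using only Python’s standard library. It is not a Baidu tool, and the `OnPageAudit` class, the `audit` function and the sample HTML are hypothetical names and data chosen for illustration.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Collect a few on-page signals mentioned in the tips above."""

    def __init__(self):
        super().__init__()
        self.meta_description = None   # content of <meta name="description">
        self.images_missing_alt = []   # src of each <img> without alt text
        self.script_count = 0          # number of <script> tags found

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content")
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            self.images_missing_alt.append(attrs.get("src"))
        elif tag == "script":
            self.script_count += 1


def audit(html):
    """Parse an HTML string and return the populated audit object."""
    parser = OnPageAudit()
    parser.feed(html)
    parser.close()
    return parser


sample = """<html><head>
<meta name="description" content="Example page summary">
<script src="app.js"></script>
</head><body>
<img src="a.png" alt="Product photo">
<img src="b.png">
</body></html>"""

report = audit(sample)
print("Meta description:", report.meta_description)
print("Images missing alt text:", report.images_missing_alt)
print("Script tags:", report.script_count)
```

A check like this only flags missing elements. Whether the description and alt text read naturally in Chinese still needs a human reviewer.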

BERT and ERNIE Are Neck and Neck

Baidu may still be smaller than Google on a global level, but it’s been honing its high-tech tools for over 20 years, and the impressive capabilities of ERNIE 3.0 are proof of that. And as the number one search engine of the world’s most populous country, it certainly has plenty of experience operating on a large scale.

All that is to say that while Baidu may not be poised to enter the Western market just yet, the fact that it’s created an NLP machine learning model as advanced as BERT speaks volumes. To find out what that means for Google, we’ll just have to stay tuned.

Image credits

Screenshots by author / July 2021

StatCounter / June 2021