Semrush’s suite of tools is unrivaled in terms of keyword and competitor research, but did you know it can help you enhance your site’s technical SEO too?

One of its most powerful features for doing so is Site Audit, a tool that provides a comprehensive high-level overview of any site’s technical SEO health.

Site Audit in a Nutshell

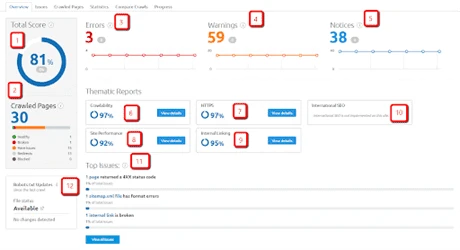

Once you’ve entered your site’s URL into the Site Audit tool and given it a minute or two to complete a full crawl of your site, its control panel will look something like this:

Let’s look at each of these sections and what they mean:

- Total Score: This is the overall score that Semrush assigns based on how many checks your site passes versus how many issues you need to fix. While somewhat ambiguous, it gives you a good bird’s-eye view of your site’s overall health and how much fixing remains.

- Crawled Pages: Below the total score, this section shows the total number of pages crawled in the audit.

- Errors: This section generates reports showing the number and types of errors Semrush was able to uncover. The number here indicates how many issues of the highest severity Semrush discovered when it crawled your site. These errors include redirect issues, 4xx errors, 5xx errors, internal broken links, formatting errors in your XML sitemap file, AMP-related issues (if you have the Business subscription tier), pages without title tags, pages with duplicate titles, pages that couldn’t be crawled at all, internal broken images, and much more.

- Warnings: These aren’t errors exactly, but they are issues that can affect your crawlability, indexability, and rankings. Because they are of medium severity, you should still pay attention to them. Warnings include missing meta descriptions, pages that aren’t compressed properly, mixed protocol links (HTTPS links on HTTP pages and vice versa), missing image alt text, JS and CSS files that are too large, external broken links, title tags that are too short or too long, pages with duplicate H1 headings, pages without an H1 heading tag, and more.

- Notices: While these are not actual errors, Semrush still recommends that you fix them. Depending on who you talk to in the SEO industry, some may or may not agree with the issues listed here. Don’t let that stop you from fixing them: while they may not be direct ranking factors, they can contribute to rankings indirectly. These notices include issues like pages with permanent redirects, subdomains that don’t support HSTS (a critical component of a successful HTTPS transition), pages that are blocked from crawling, URLs that are too long, whether or not the site has a robots.txt file, hreflang language mismatches, orphaned pages in sitemaps, broken external JS and CSS files, pages that take more than three clicks to reach, and many more.

- Crawlability: Whether or not your site is crawlable has very important implications for your rankings. In fact, Gary Illyes said in a Reddit post that, failing anything else, SEO practitioners should “MAKE THAT DAMN SITE CRAWLABLE.” His choice to use all caps speaks for itself.

In this section, Semrush addresses several critical issues that can impact crawlability if they are present on a large enough scale, and gives you suggestions on how to fix them.

- HTTPS: This page tells you how well your HTTPS is implemented, as well as what you need to fix to ensure that your security certificate, server, and website architecture are up to snuff.

- Site Performance: This is a critical SEO factor to get right. When your site performs well, you can expect an increased ranking benefit as a result, especially when you have great content and the links to support it.

- Internal Linking: When you create an effective internal linking structure, you help both users and search engine bots navigate your site with ease. That means a better user experience for visitors and more efficient indexing for search engines.

- International SEO: If your site has visitors from outside your country of residence, it’s important to ensure that your international SEO is in tip-top shape. This section helps you do just that by providing an overview of your site’s hreflang tags, links, and issues.

- Top Issues: This section is fairly self-explanatory. Here, you’ll see a quick rundown of your site’s most pressing issues, whether they have to do with crawlability, HTTPS implementation, performance, or any other area covered in Semrush’s audit.

- Robots.txt Updates: Your site’s robots.txt file tells search engine crawlers which pages they may and may not crawl, so it’s easy to see how it can significantly affect how your site gets indexed. Luckily, this section notifies you of any changes made to the file since the previous crawl.
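To see how robots.txt rules translate into crawlable and blocked URLs, here is a minimal Python sketch using the standard library’s urllib.robotparser module. The robots.txt content, URLs, and user-agent names are hypothetical examples, and this is not how Semrush itself evaluates the file; it simply parses the rules locally and lists the URLs a given crawler may not fetch.

```python
from typing import List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content (an assumption for illustration, not a real file).
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search

User-agent: Googlebot
Disallow: /staging/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def blocked_urls(robots_txt: str, urls: List[str], user_agent: str) -> List[str]:
    """Return the URLs that the given user agent is not allowed to crawl."""
    parser = build_parser(robots_txt)
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    pages = [
        "https://example.com/cart/checkout",
        "https://example.com/blog/post",
        "https://example.com/staging/new",
    ]
    for agent in ("Bingbot", "Googlebot"):
        print(agent, blocked_urls(ROBOTS_TXT, pages, agent))
```

In this example, the dedicated Googlebot group replaces the * group rather than adding to it, so Googlebot may crawl /cart/ but not /staging/. A small edit to a file like this can change crawler access site-wide, which is why it’s worth being alerted when the file changes.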

To better understand the kinds of technical SEO insights the Site Audit tool can provide, we’ll take a closer look at the Crawlability, HTTPS, Site Performance, and Internal Linking sections.

Crawlability

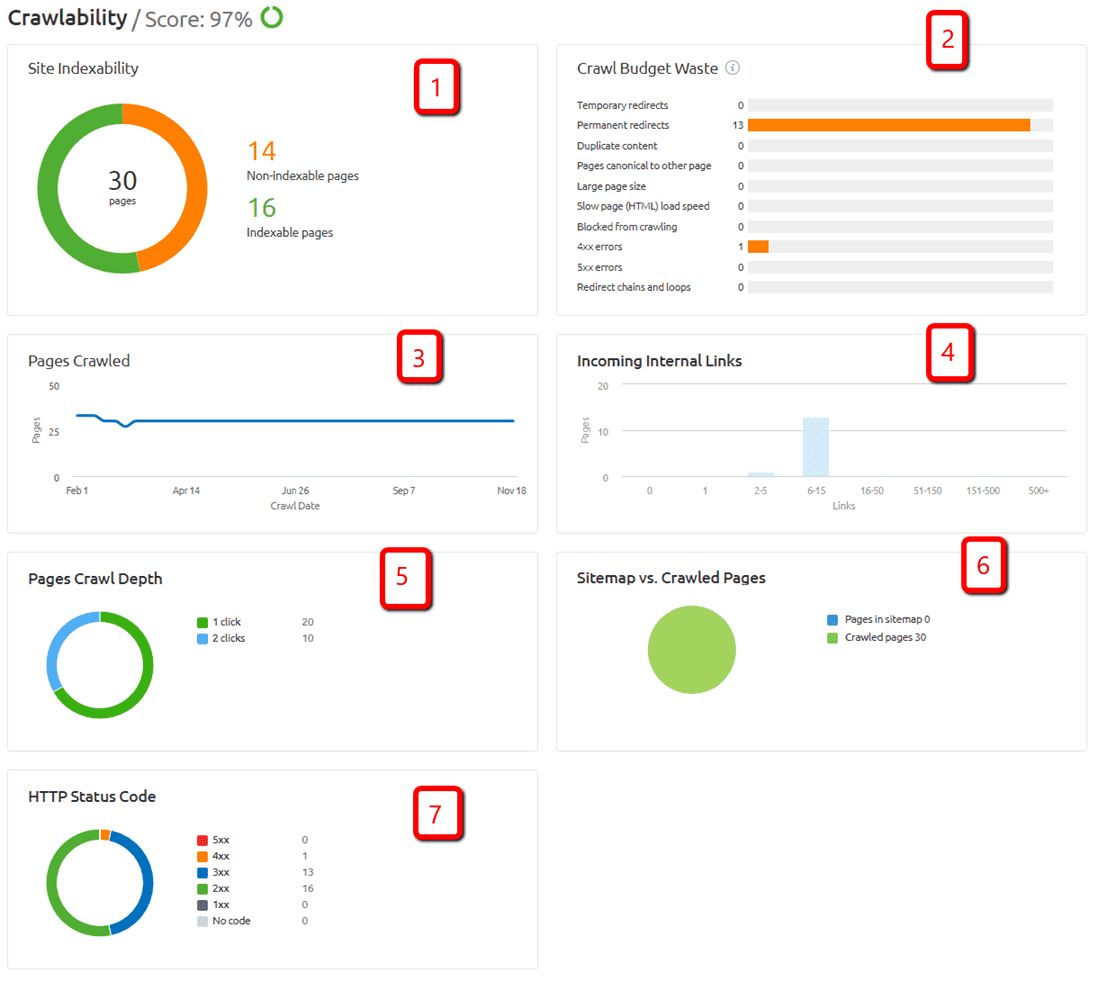

Click on the Crawlability section and you’ll soon see a collection of essential crawl-related metrics:

As you can see, those metrics include:

- Site Indexability: This score gives you a ratio of your pages that can’t be indexed to the pages that can be indexed.

- Crawl Budget Waste: This graph gives you ten reasons that your crawl budget is currently being wasted, including temporary redirects, permanent redirects, duplicate content and more.

- Pages Crawled: This graph gives you an overall view of how many pages were crawled, along with the timeframe of the crawl.

- Incoming Internal Links: This graph shows how many internal links point to your pages. You can click on a bar within the graph to view more data, including the unique page views of those pages (assuming you have linked your Google Analytics account with Semrush), their crawl depth, and the number of issues for each page, along with link data such as incoming and outgoing internal and external links.

- Page Crawl Depth: This table shows the total number of pages on the site, grouped by how many clicks it takes to reach them. This is useful for diagnosing crawl depth issues.

- Sitemap vs. Crawled Pages: This number gives you the pages in the sitemap compared to the pages that have actually been crawled. This will help you assess whether or not there are any issues with crawlability by way of the sitemap.

- HTTP Status Code: This section provides a graph tallying the HTTP status codes your pages returned, broken down into 5xx errors, 4xx errors, 3xx redirects, 2xx (OK) status codes, 1xx status codes, and pages that return no status.
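The status-code tally and the sitemap-versus-crawled comparison come down to simple bookkeeping. The Python sketch below assumes you already have crawl results as a mapping of URL to status code (None when no response came back) and your sitemap.xml as a string. The sample data and function names are hypothetical, and the sketch only illustrates the idea rather than Semrush’s implementation.

```python
from collections import Counter
from typing import Dict, Optional, Set
import xml.etree.ElementTree as ET

# XML namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def status_class(code: Optional[int]) -> str:
    """Bucket a status code by its first digit, e.g. 404 -> '4xx'."""
    if code is None:
        return "no status"
    return f"{code // 100}xx"


def tally_status_codes(results: Dict[str, Optional[int]]) -> Counter:
    """Count crawled URLs per status-code class."""
    return Counter(status_class(code) for code in results.values())


def sitemap_urls(sitemap_xml: str) -> Set[str]:
    """Extract every <loc> URL from a standard (non-index) sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)}


def compare_sitemap(sitemap: Set[str], crawled: Set[str]) -> Dict[str, Set[str]]:
    """Split URLs into 'listed but not crawled' and 'crawled but not listed'."""
    return {
        "in_sitemap_not_crawled": sitemap - crawled,
        "crawled_not_in_sitemap": crawled - sitemap,
    }


# Hypothetical crawl results and sitemap, for illustration only.
CRAWL_RESULTS = {
    "https://example.com/": 200,
    "https://example.com/about": 200,
    "https://example.com/contact": 301,
    "https://example.com/missing": 404,
    "https://example.com/timeout": None,
}
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about</loc></url>
  <url><loc>https://example.com/old-page</loc></url>
</urlset>"""

if __name__ == "__main__":
    print(tally_status_codes(CRAWL_RESULTS))
    print(compare_sitemap(sitemap_urls(SITEMAP_XML), set(CRAWL_RESULTS)))
```

A URL that is listed in the sitemap but never reached by the crawl (like /old-page here) is a good first place to look for crawlability problems.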

HTTPS

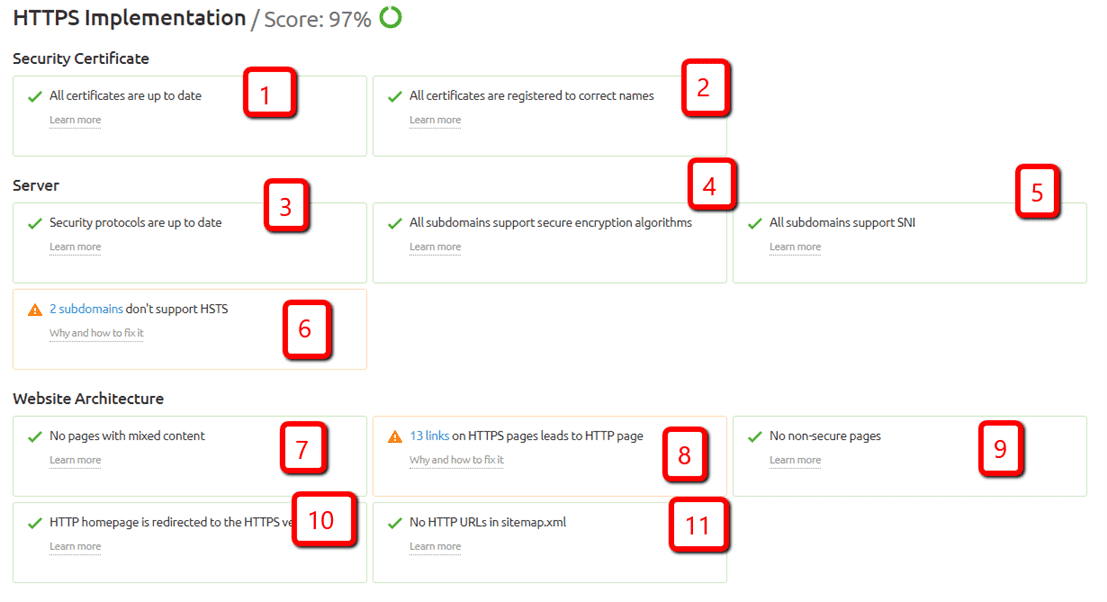

From the overview page, navigate to the HTTPS section to see a variety of security-, server- and architecture-related metrics:

Specifically, you’ll see whether:

- All certificates are up to date: You want to make sure that your certificates are renewed on time. An expired certificate can prevent a site from displaying or loading properly, because browsers warn visitors away from pages with invalid certificates.

- All certificates are registered to correct names: Every certificate must be registered to the same domain name that is displayed in the address bar. If it isn’t, the browser may refuse to display the page when it detects that the domain name doesn’t match the one in the Secure Sockets Layer (SSL) certificate.

- Security protocols are up to date: This issue refers to running outdated security protocols, such as SSL or old versions of Transport Layer Security (TLS). These are big security risks for your site, because older protocols have known vulnerabilities that make your site easier to attack. It is considered a best practice to always implement the latest security protocol version.

- All subdomains support secure encryption algorithms: When secure encryption algorithms are not properly supported, subdomains can show up as insecure. This is usually rectified by configuring the server behind each subdomain to offer modern cipher suites, and by making sure that all subdomains are covered by your SSL certificate.

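- All subdomains support SNI: Server Name Indication (SNI) is an extension of TLS that lets the browser tell the server which hostname it is trying to reach at the start of the connection. That way, the server can present the right certificate and content, even when the site shares an IP address with other domains on the same web server.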

- All subdomains support HSTS: HTTP Strict Transport Security (HSTS) works to let web browsers know that they are allowed to talk to servers only when HTTPS connections are in place. This way, you don’t inadvertently serve unsecured content to your audience.

- No pages have mixed content: This refers to pages that load some of their content over HTTP and the rest over HTTPS. An example of this error would be a page on an HTTPS site whose images are served over HTTP instead of HTTPS.

- Links on HTTPS pages lead to HTTP pages: This is another example of mixed content, specifically when links on HTTPS pages lead to those with HTTP protocols.

- No non-secure pages exist: Non-secure pages can cause problems on a site that is otherwise secure, again because of the mixed protocol issue mentioned above.

- The HTTP homepage is redirected to the HTTPS version: When implementing HTTPS, it is considered a standard best practice to redirect all HTTP pages to their HTTPS counterparts. You can do this by adding redirects via cPanel, your .htaccess file, a WordPress redirect plugin or a similar tool, depending on your preference and level of technical ability.

- No HTTP URL is in the sitemap.xml: You shouldn’t have mixed URL protocols in your sitemap either, because this can trigger duplicate content issues by exposing two URLs for the same content (the HTTP URL in the sitemap and the HTTPS URL on the site).

You’ll also confuse search engine spiders by setting up your content this way. So it’s a good idea to always make sure that the URLs in your sitemap match the URLs on your site 1:1, whether the site uses HTTP or HTTPS.
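Checks like “No pages have mixed content” and “Links on HTTPS pages lead to HTTP pages” can be approximated by scanning a page’s HTML for URLs that resolve to http://. The Python sketch below uses the standard library’s html.parser and urllib.parse modules. The page URL, the sample HTML, and the list of tags treated as subresources are assumptions for illustration, not Semrush’s exact rules.

```python
from html.parser import HTMLParser
from typing import List, Tuple
from urllib.parse import urljoin, urlparse

# Tags whose src attribute loads a subresource into the page (assumed, non-exhaustive).
SRC_TAGS = {"img", "script", "iframe", "audio", "video", "source"}


class MixedContentFinder(HTMLParser):
    """Collect insecure (http://) subresources and links from one page's HTML."""

    def __init__(self, page_url: str) -> None:
        super().__init__()
        self.page_url = page_url
        self.insecure_resources: List[str] = []
        self.http_links: List[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "a":
            url, bucket = attr_map.get("href"), self.http_links
        elif tag in SRC_TAGS:
            url, bucket = attr_map.get("src"), self.insecure_resources
        elif tag == "link" and "stylesheet" in (attr_map.get("rel") or "").lower().split():
            url, bucket = attr_map.get("href"), self.insecure_resources
        else:
            return
        if url:
            # Resolve relative and protocol-relative URLs against the page URL.
            absolute = urljoin(self.page_url, url)
            if urlparse(absolute).scheme == "http":
                bucket.append(absolute)


def audit_page(page_url: str, html: str) -> Tuple[List[str], List[str]]:
    """Return (insecure subresources, links to HTTP pages) for an HTTPS page."""
    if urlparse(page_url).scheme != "https":
        return [], []  # mixed content only applies to pages served over HTTPS
    finder = MixedContentFinder(page_url)
    finder.feed(html)
    finder.close()
    return finder.insecure_resources, finder.http_links


# Hypothetical page markup for illustration.
SAMPLE_HTML = """<html><head>
<link rel="stylesheet" href="http://cdn.example.com/style.css">
<link rel="canonical" href="http://example.com/page">
<script src="/js/app.js"></script>
</head><body>
<img src="http://example.com/logo.png" alt="Logo">
<img src="//cdn.example.com/photo.jpg" alt="Photo">
<a href="http://example.com/old-page">Old page</a>
<a href="/new-page">New page</a>
</body></html>"""

if __name__ == "__main__":
    print(audit_page("https://example.com/page", SAMPLE_HTML))
```

Relative and protocol-relative URLs (such as /js/app.js or //cdn.example.com/photo.jpg) inherit the page’s HTTPS scheme, so only the explicitly hard-coded http:// URLs are flagged.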

Site Performance

Site performance is a critical SEO factor to get right. When your site performs well, you can expect increased rankings as a result, especially when you have great content and the links to support it.

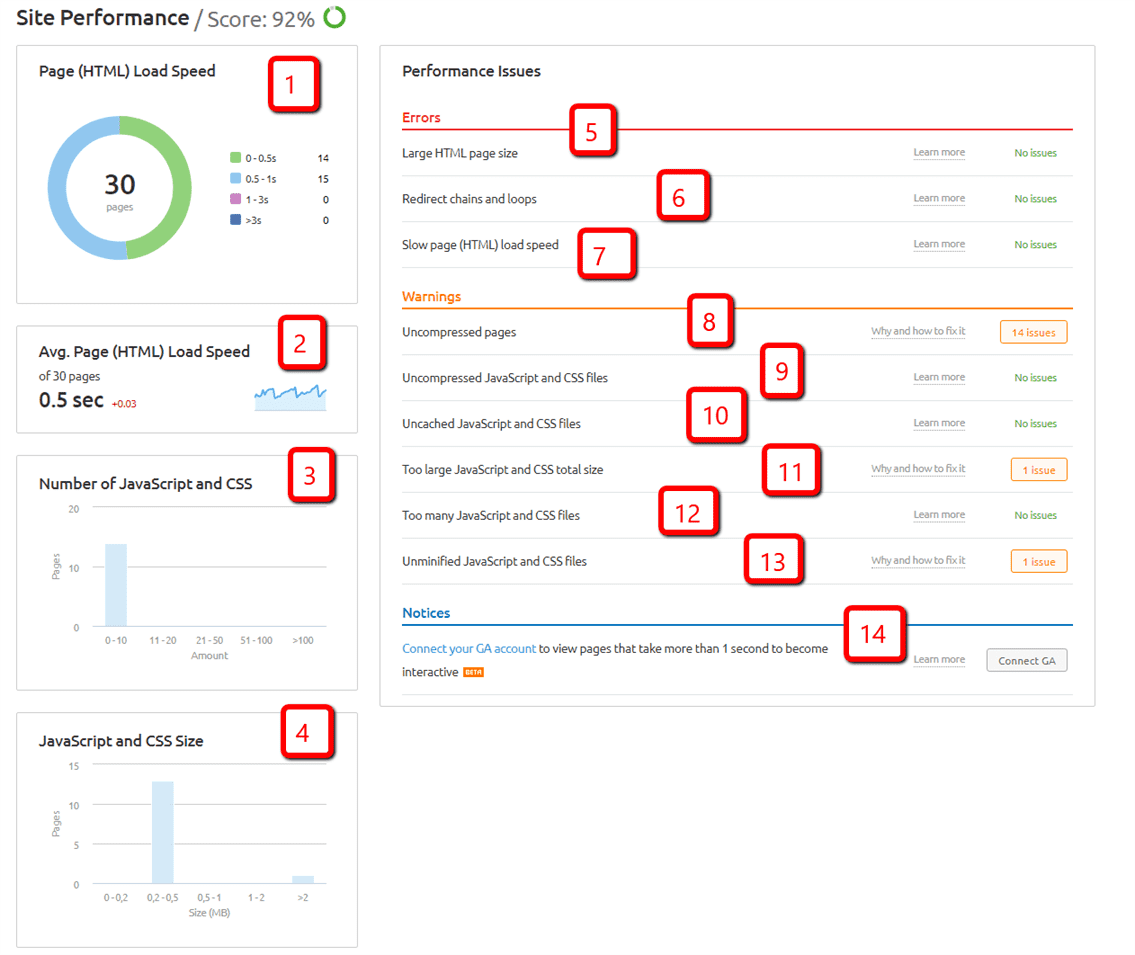

To that end, the Site Audit tool’s Site Performance section provides a wealth of critical performance-related information:

We’ve got lots of metrics to unpack here:

- Page (HTML) Load Speed: It’s considered an SEO best practice nowadays to improve page speed until each page loads in under five seconds at the most. This item in the Site Audit report groups pages by their load-speed range, so you can diagnose and troubleshoot any page speed issues that come up in an audit.

- Average Page (HTML) Load Speed: This section shows the average load speed across a group of 30 pages on your site. For the site in this example, the average load speed across those 30 pages is 0.5 seconds.

- Number of JavaScript and CSS: This section shows the average number of JavaScript (JS) and Cascading Style Sheets (CSS) files across your site. As a rule, if you want an extremely lightweight site, you shouldn’t have more than three JS and CSS requests combined. That’s because every server request adds to page load time. If your server is from the stone age, you don’t want to overload it with unnecessary requests, and keeping JS and CSS requests to a minimum helps avoid this.

- JavaScript and CSS Size: This section groups your pages by the size range their JS and CSS files fall into. When it comes to JS and CSS file size, you can never be too careful. Maintaining the look of your page is important, but it is possible to do so while keeping things lean and mean enough for serious performance. To reduce the size of JS and CSS files, you can try using fewer or more standard fonts, using CSS in place of images, and following modern coding practices such as CSS Flexbox and CSS Grid.

- Large HTML Page Size: A large HTML page size creates bottlenecks in site performance. While Semrush’s reporting threshold for page size is approximately 2 MB, I recommend keeping your page size to 100 to 200 KB; the smaller, the better, depending on the type of site. Even at the upper end of averages, you should never have a page that exceeds 1 MB. If you do, something is wrong. Whether the cause is lots of unneeded plugins loading at once or mismanaged database connections that need to be cleaned up, you must get to the bottom of whatever bottleneck is pushing your page size past 1 MB. Trust me, your page speed will thank you.

- Redirect Chains and Loops: This section reports redirect chains and loops that are causing major issues. The standard advice in most cases is to use a 301 (permanent) redirect that points directly to a URL with similar content, rather than routing visitors through several intermediate redirects. Redirects are fine when they are done correctly. But when they are done incorrectly, the results can be disastrous, and not always in obvious ways. For example, if Screaming Frog shows redirect chains but you can’t spot any in a browser, your site likely serves requests differently to crawlers and browsers. Even though this doesn’t look as obvious as other errors, you should still fix it as soon as humanly possible during audit implementation.

- Slow Page (HTML) Load Speed: Here is where you’ll see any alerts about pages that have been flagged as loading too slowly. Since the probability of users bouncing increases by more than 30 percent as a page’s load time rises from one to three seconds, it’s crucial to quickly sort out the issues shown here.

- Uncompressed Pages: Per Semrush’s description, this issue is triggered when the Content-Encoding header is not present in the response. Page compression shrinks the size of the files your server sends without changing the content they deliver. Uncompressed pages load more slowly, and user experience tends to suffer as a result, which can eventually lead to lower search engine rankings. Compression helps the user experience because the browser receives these files more quickly and can start rendering the page sooner. To start compressing pages, you can enable GNU zip (gzip) compression on your server or use a compression plugin for WordPress.

- Uncompressed JavaScript and CSS Files: These issues register in Semrush when compression is not indicated in the HTTP response headers. Compressing your JS and CSS files decreases your overall page size and, with it, your page load time. On the flip side, any uncompressed JS or CSS files will unnecessarily increase both page size and load time. One easy way to ensure all your JS and CSS files are compressed is to use an external application to upload them, since many such applications compress your files automatically.

- Uncached JavaScript and CSS Files: To keep this issue from appearing in Semrush, make sure that caching is specified in the page’s response headers. Caching your JS and CSS files lets the browser store these resources on the user’s machine and reuse them, rather than downloading them again each time the page reloads. The browser uses less data, which in turn helps your page load faster.

The simplest way to enable caching is to install a WordPress caching plugin.

- Too Large JavaScript and CSS Total Size: When Semrush reports this, it is checking whether the total size of the JS and CSS files on your page exceeds 2 MB. Once that total passes 2 MB, you begin to have problems. Remember how I said earlier that the overall page size should not exceed 200 KB? If your JS and CSS files alone exceed 2 MB, that’s 2 MB on top of the HTML size of your page! Of course, as websites get larger and more dynamic, a point will likely come where 2 MB is not out of the question. But I don’t think we’re there yet. Not by a long shot. In my experience, most pages don’t need to exceed 200 KB to run smoothly on today’s technology. And with more extreme optimization measures, depending on the type of site you run, you can reach the elusive 32 KB average page size from a decade ago. As a much more conservative web developer in this area, I prefer five total files to 25 unnecessary JS and CSS files that are never used or that only perform mundane tasks.

- Too Many JavaScript and CSS Files: Semrush reports this issue when there are more than 100 JS and CSS files on a page. I think that’s far too liberal a threshold. In fact, CSS-Tricks recommends a maximum of three CSS files for any given site, and the same principle applies to JS files. If you have hundreds of plugins doing their thing and only three or four are necessary, you can save a ton of server-request overhead by combining these files. Concatenating your stylesheets into a single file is the preferred approach, because it reduces your CSS to one server request. This cuts your server requests considerably, especially when many CSS files would otherwise load at once. The same goes for JS files. (CSS sprites apply the same idea to images, combining many small images into a single file.)

- Unminified JavaScript and CSS Files: Minification is yet another process that Semrush reports on when your files don’t use it. Minification removes things like comments, blank lines, and unnecessary white space from your JS and CSS files. The minified file provides exactly the same functionality as the original while using less bandwidth per request. This, in turn, helps improve site speed. Luckily, minification is anything but difficult. With tools like CSS Minifier, JavaScript Minifier, and Minifier.org, it’s as easy as copy + paste.

- Notices: In this section, Semrush will serve you any notices it sees fit. In our example, it’s encouraging us to connect our Google Analytics account to learn more about interactive pages.
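To make the difference between a single redirect, a redirect chain, and a redirect loop concrete, here is a minimal Python sketch that follows URLs through a redirect map. It assumes you have already collected each redirecting URL and its target (for example, from the Location headers seen during a crawl); the map, the URLs, and the max_hops limit are hypothetical. The flatten_redirects helper shows the usual fix for chains: point every source straight at its final destination.

```python
from typing import Dict, List, Tuple


def follow_redirects(start: str, redirects: Dict[str, str], max_hops: int = 10) -> Tuple[List[str], str]:
    """Follow start through the redirect map and classify the result.

    Verdicts: 'ok' (no redirect), 'redirect' (one hop), 'chain' (two or more
    hops), 'loop' (a URL repeats), or 'too many hops' (more than max_hops).
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too many hops"
    hops = len(path) - 1
    if hops == 0:
        return path, "ok"
    return path, "redirect" if hops == 1 else "chain"


def flatten_redirects(redirects: Dict[str, str]) -> Dict[str, str]:
    """Map each source directly to its final destination; loops are left out for manual fixing."""
    flattened = {}
    for source in redirects:
        path, verdict = follow_redirects(source, redirects)
        if verdict in ("redirect", "chain"):
            flattened[source] = path[-1]
    return flattened


# Hypothetical redirect map: source URL -> redirect target.
REDIRECTS = {
    "http://example.com/a": "https://example.com/a",
    "https://example.com/a": "https://example.com/b",
    "https://example.com/old": "https://example.com/new",
    "https://example.com/x": "https://example.com/y",
    "https://example.com/y": "https://example.com/x",
}

if __name__ == "__main__":
    for url in REDIRECTS:
        print(url, follow_redirects(url, REDIRECTS))
    print(flatten_redirects(REDIRECTS))
```

Loops are deliberately excluded from the flattened map, since there is no final destination to point them at; those need a human decision about where the page should actually go.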

Internal Linking

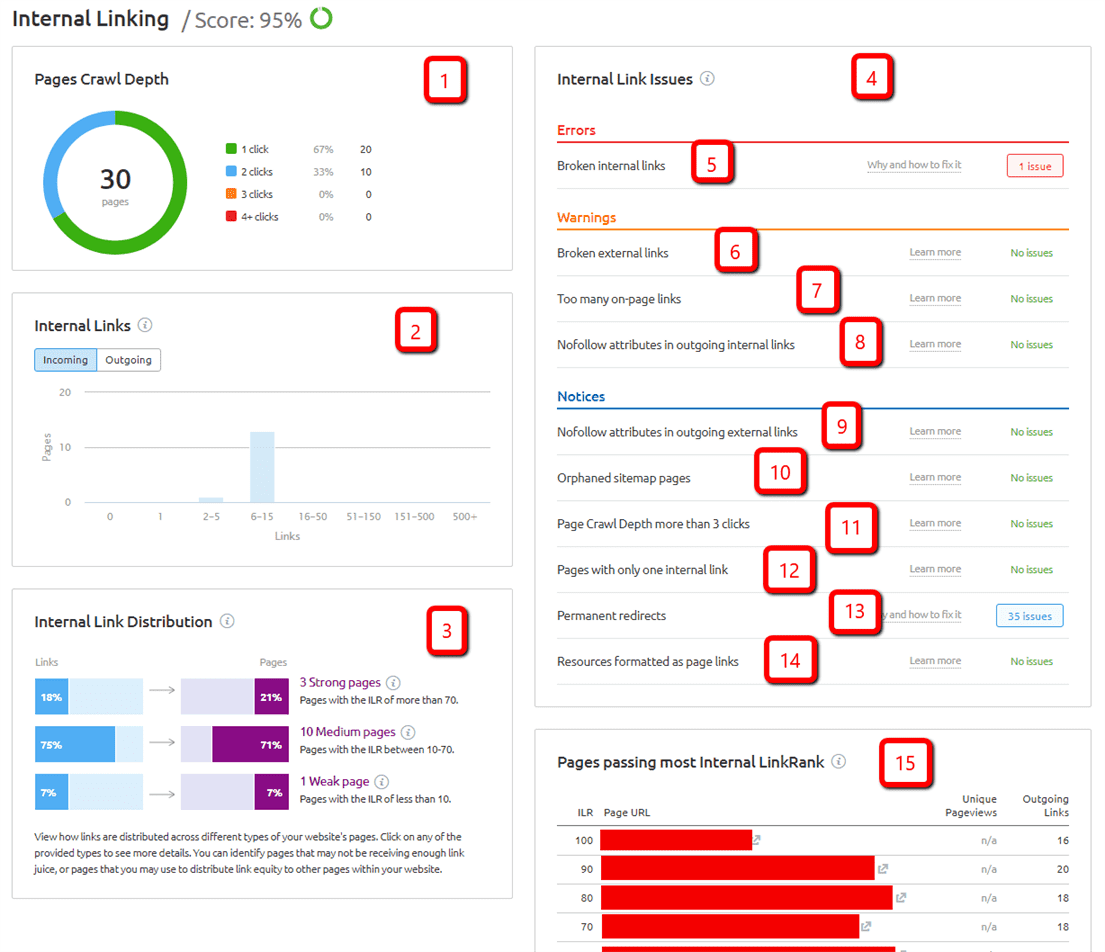

In one click, the Internal Linking section of the Site Audit tool reveals a wealth of invaluable information about a site’s internal linking system:

Each subsection holds a great deal of information in its own right:

- Pages Crawl Depth: This section shows what percentage of your pages sit at each crawl depth. Crawl depth refers to how many clicks it takes to get from the home page to a specific page, so this gives you a good overall picture of how your site is currently structured. There are several best practices and opinions in SEO on arranging your site structure. Some SEOs believe that a siloed site structure is best, while others prefer putting all of their content in the main directory and adhering to a flat architecture, making sure that most pages are within two to three clicks of the home page. So which is best? As Google’s John Mueller said in a Webmaster Central office hours hangout, what’s most important is that a site’s architecture makes it clear to crawlers how pages are related to one another.

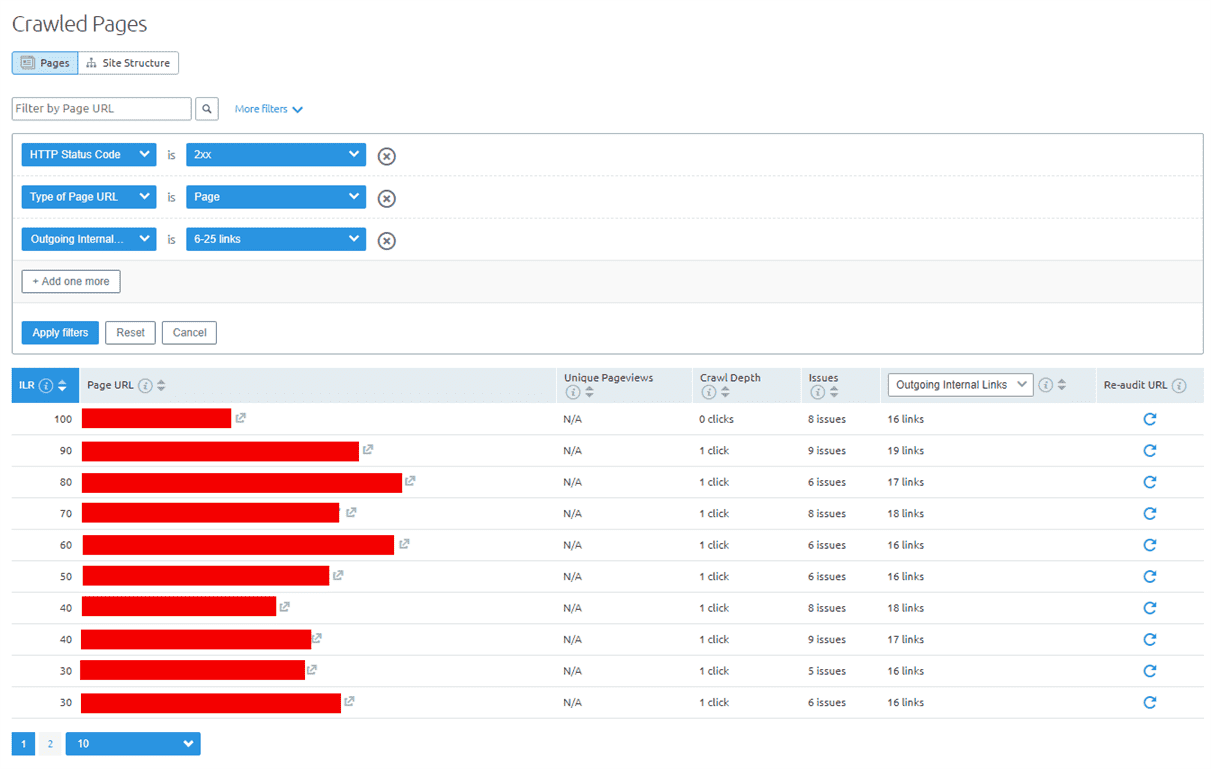

- Internal Links: This section shows you a high-level overview of the quantities of pages on your site and how they are linked. You can use it to figure out whether or not you have orphaned pages, pages that have one incoming internal link, or if you have any pages where the quantity of the outgoing links is too high. If you click on the light blue bar within this section, you’ll get a more detailed look of your site’s internal linking structure:

Under the Pages tab, you can see each page’s unique views, crawl depth, and issues. Under the Site Structure tab, you’ll see an overview of each directory’s URLs and issues.

- Internal Link Distribution: This section lets you examine how internal links are distributed across your website, so you can tweak link equity distribution and make sure that the pages you want to receive link equity are actually receiving it.

- Internal Link Issues: This section shows all the issues affecting your internal links. Cleaning up your internal links is important because their quality is a quality signal. If your links are outdated or broken, mix protocols (like HTTP links on an HTTPS page), point to the wrong pages (e.g. you link to one page when you mean another), or have any other issues, this screams “low quality” to Google. So, any internal link issues revealed here should be resolved as soon as possible. Common internal link issues include links wrapped in JavaScript, as well as links that point to pages returning 4xx or 5xx errors.

- Broken Internal Links: If you have broken internal links on your site, you should fix them. Broken internal links lead users to non-existent pages, which is bad for users and search engines alike. For users, it’s a problem because they can’t find the information they’re looking for, and the user experience suffers as a result. Search engines don’t like it either, because broken links stop crawlers from reaching the pages you meant to link to and waste crawl budget on dead ends.

- Broken External Links: This issue is similar to broken internal links, except that the broken links point to other websites.

The reason this can be an issue, especially if you have many of them on your site, is that when search engine spiders crawl your site and see lots of broken links, whether internal or external, they may conclude that your site is barely maintained (if at all).

And if it’s not maintained, why would it be worth ranking highly in the SERPs?

- Too Many On-Page Links: Here, Semrush reports whether a page has too many on-page links. Its magic number is 3,000 links, but I disagree. As Google’s Matt Cutts said in a Search Central video, you should keep each page’s number of links “reasonable” and bear in mind that the more links there are, the less link equity each one will pass on. Also consider that unless a page is clearly intended to serve as a link directory, a large number of links can appear spammy in the eyes of users.

- Nofollow Attributes in Outgoing Internal Links: This section displays any nofollow attributes found in outgoing internal links. Nofollow is a value you can add to a link’s rel attribute if you don’t want search spiders to follow the link. It also doesn’t pass link equity to the linked page, so the best rule of thumb is not to use it on internal links unless you have a good reason to.

- Nofollow Attributes in Outgoing External Links: When used incorrectly, nofollow outgoing external links are likely just as damaging as nofollow internal links. Again, they don’t pass any link equity, and they tell crawlers not to follow the links. As such, they should only be used in the rare cases where you have a link that you don’t want to pass value to. In Google’s own words, only use nofollow “when other values don’t apply, and you’d rather Google not associate your site with, or crawl the linked page from, your site.”

- Orphaned Sitemap Pages: Any page appearing in your sitemap that is not linked to from another internal page on-site is considered an orphaned sitemap page. Semrush states that these can be a problem because crawling outdated orphaned pages too much will waste your crawl budget. Their recommendation is that, if any orphaned page exists in your sitemap, and if it has significant, valuable content, that page should be linked to immediately.

- Page Crawl Depth More Than Three Clicks: If it takes more than three clicks to reach a given page from the site’s homepage, the site will be harder to navigate for both users and search engine bots.

So, any pages appearing in this section should be brought within two or three clicks of the homepage.

- Pages with Only One Internal Link: The prevailing view on this issue is that the fewer internal links point to a given page, the fewer visits that page will get.

With that in mind, be sure to add more internal links to any pages Semrush includes in this section.

- Permanent Redirects: 301 redirects (a.k.a. permanent redirects) are used all the time, whether for sending users to a new page or implementing an HTTP-to-HTTPS transition.

However, they can become major issues when too many redirects cause bottlenecks on the server. They can also cause problems when redirect loops prevent the page from being displayed.

To avoid either scenario, only use permanent redirects when it’s appropriate and you intend to change the page’s URL as it appears in search engine results.

- Resources Formatted as Page Links: Pages are the physical pages on your site, while resources are the individual files (such as images or videos) that those pages link to or embed. When you format a resource as a page link (for example, linking to an image file with a regular hyperlink instead of embedding it), you risk confusing crawlers. As a member of the Semrush team explained on Reddit, an alert in this section “is only a notice, so it is not currently negatively affecting the site, but fixing it could potentially help.”

- Pages Passing Most Internal LinkRank: Semrush’s Internal LinkRank (ILR) metric measures “the importance of your website pages in terms of link architecture.” In this section, you can quickly see which pages are passing on the most ILR. In other words, you can see which pages matter most to your site’s link architecture.
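Several of the checks above (crawl depth beyond three clicks, orphaned sitemap pages, and pages with only one internal link) can be computed from a simple internal link graph. This Python sketch assumes the graph is a mapping from each page to the pages it links to, counts incoming links by distinct linking page, and runs a breadth-first search from the homepage to find the minimum number of clicks to each page. The pages, the sitemap, and the three-click threshold are illustrative assumptions, not Semrush’s exact logic.

```python
from collections import Counter, deque
from typing import Dict, List, Set


def crawl_depths(links: Dict[str, List[str]], home: str) -> Dict[str, int]:
    """Breadth-first search: depth = minimum number of clicks from the homepage."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # the first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def incoming_counts(links: Dict[str, List[str]]) -> Counter:
    """Count how many distinct pages link to each page (self-links ignored)."""
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


def link_report(links: Dict[str, List[str]], home: str, sitemap: Set[str], max_depth: int = 3) -> Dict[str, List[str]]:
    """Flag deep pages, orphaned sitemap pages, and pages with one incoming link."""
    depths = crawl_depths(links, home)
    incoming = incoming_counts(links)
    return {
        "deeper_than_max": sorted(p for p, d in depths.items() if d > max_depth),
        "orphaned_sitemap_pages": sorted(p for p in sitemap if p != home and incoming[p] == 0),
        "only_one_internal_link": sorted(p for p, n in incoming.items() if n == 1),
    }


# Hypothetical site: each page maps to the internal pages it links to.
LINKS = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/"],
    "/blog/post-1": ["/blog/post-2"],
    "/blog/post-2": ["/blog/post-3"],
    "/blog/post-3": [],
    "/about": ["/"],
}
SITEMAP = {"/", "/blog", "/about", "/blog/post-1", "/landing-page"}

if __name__ == "__main__":
    for issue, pages in link_report(LINKS, "/", SITEMAP).items():
        print(issue, pages)
```

Breadth-first search is the natural choice here because it visits pages in order of click distance, so the first time a page is reached is also its shallowest depth.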

Site Audit: Your Secret Weapon in the Fight for Better Technical SEO

For those who aren’t familiar with technical SEO, it can present a number of tricky challenges that take lots of research and practice to overcome. In fact, even seasoned technical SEO practitioners can become bogged down by tedious tasks that take up precious time and energy.

That’s why Semrush’s Site Audit tool is so robust: Whether you’re a technical SEO pro or are still learning the ropes, Site Audit makes it easy to see where your site stands, how it can be improved, and what you should work on first. From security certificates to internal linking, you’ll be able to handle any technical SEO issues that come your way.